This Week

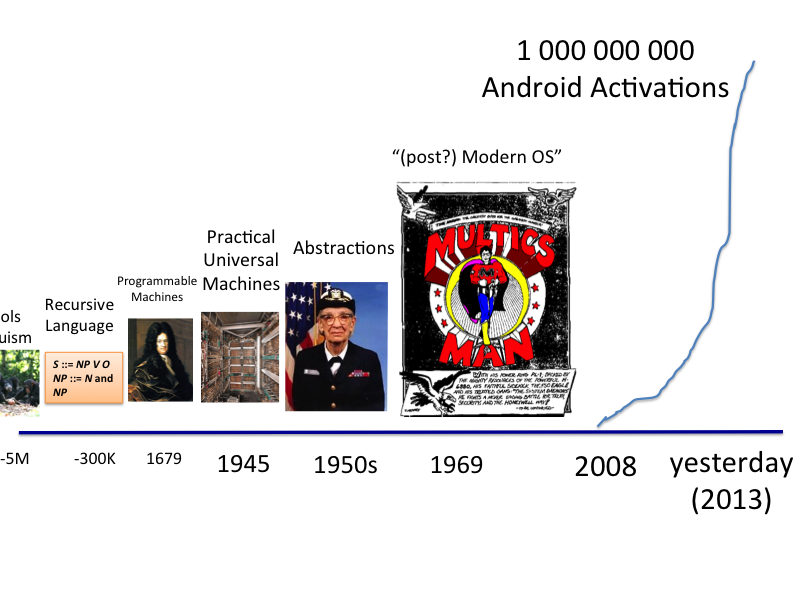

Two momentous events in operating systems happened this week:

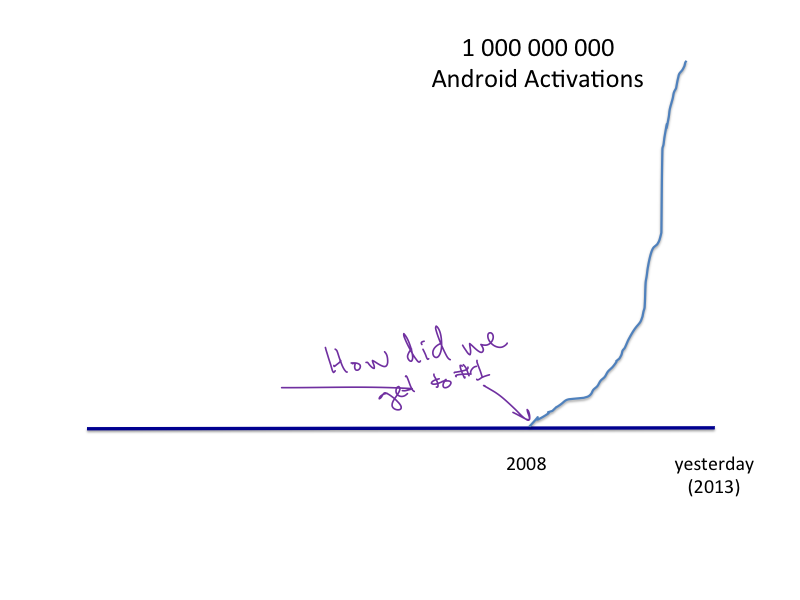

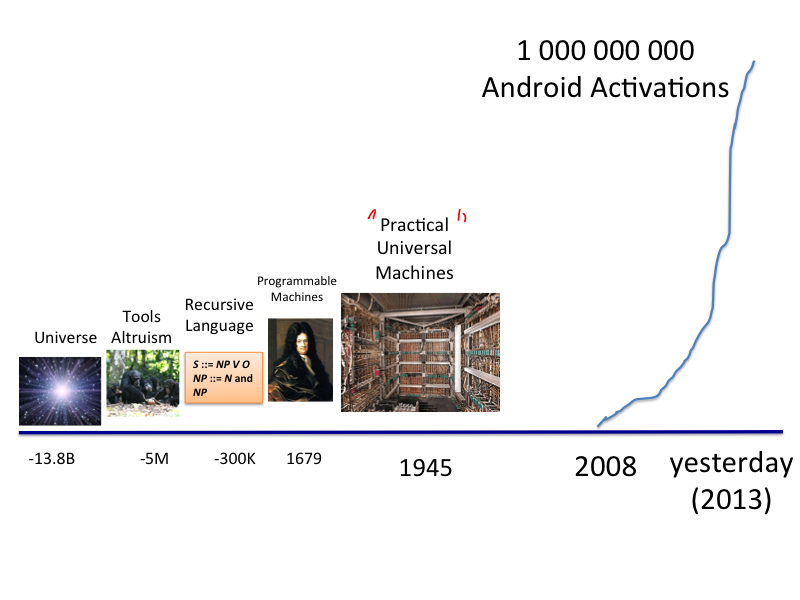

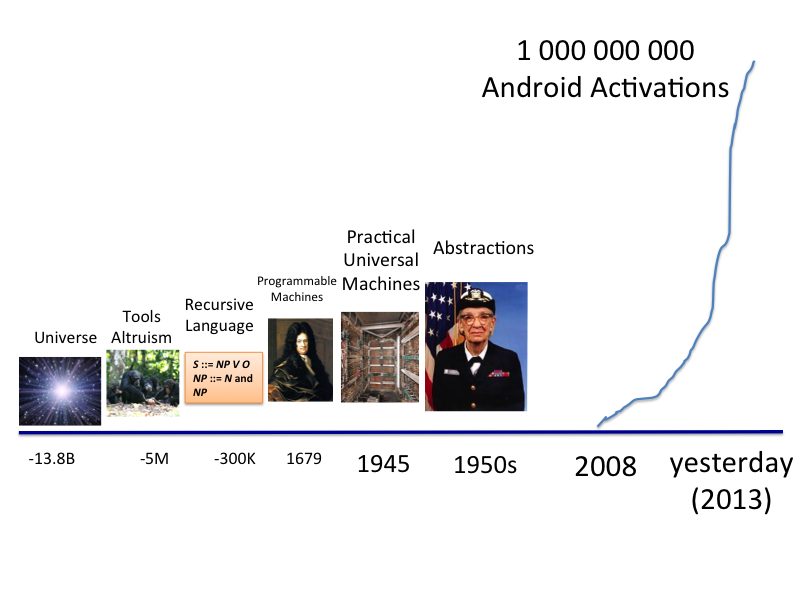

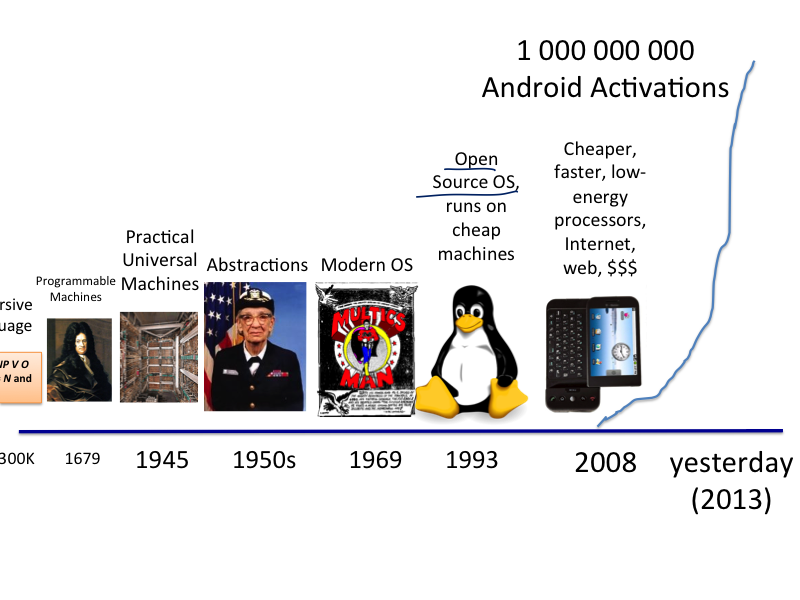

- Billionth Android device was activated.

- Microsoft bought Nokia's phone business (for a relative pittance).

How did we get here?

|

The long-awaited HTC Dream, the first commercial handset running Google’s Android operating system, will be coming to T-Mobile as the G1 for $179 on October 22nd. Featuring a 3-inch touchscreen, internet navigation buttons and a full QWERTY keypad, the smartphone market has finally broken free of Symbian, Windows Mobile and the sweet clutches of fruit companies. Mark Wilson, Gizmondo, 23 Sept 2008 |

|

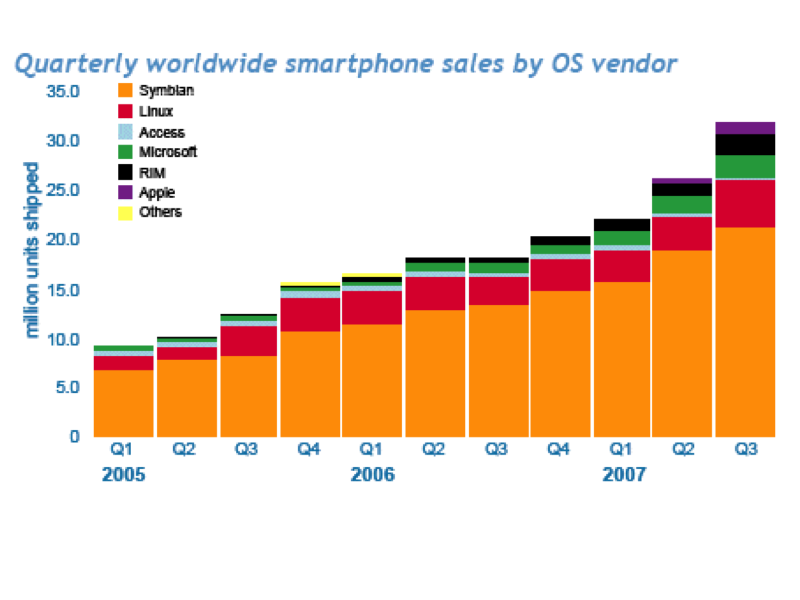

Data from Symbian Market Round-Up (Symbian was a partnership of several companies, dominated by Nokia.) |

|

Google Just Passed 1 Billion Android Activations Sundar Pichai's announcements Microsoft paid $7.2B ((all of) Nokia was worth about $40B five years ago) and lost $15B in market value on the news. |

|

One Billion Android activations in less than five years! That's one for every seven humans. (I have about 8, so obviously they are not fairly distributed.) |

How did we get to the first one?

Operating Systems Pre-History

How far back do we need to go to understand it?

Nearly everything that happens is the result of things that happened in the past. Let's start at the very beginning...

|

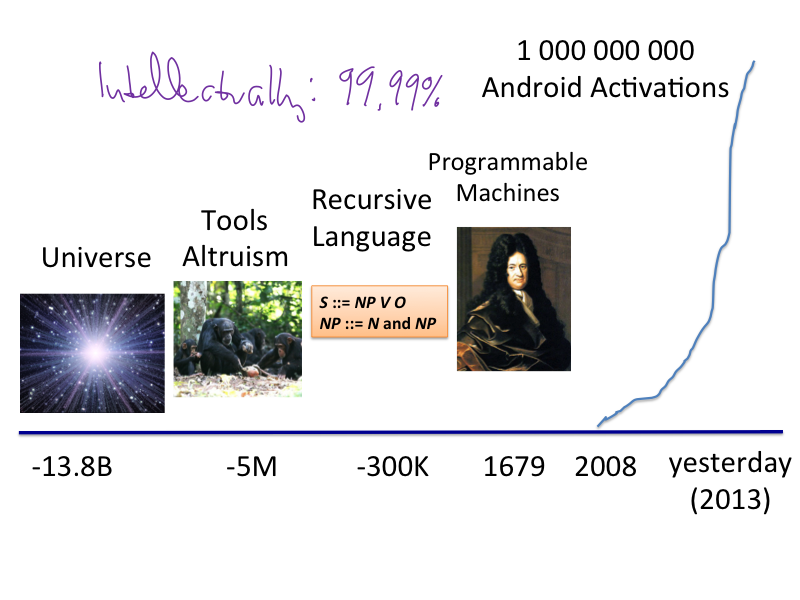

Tools amplify our natural abilities. Tools go back long before humans: Humans and Chimpanzees Learned to Use Tools from a Common Ancestor Android grew from open tools that were developed primarily for altruistic reasons. Our ancestors have been altruistic for some time: Spontaneous Altruism by Chimpanzees and Young Children Buildling complex systems requires working in large teams. Insects figured that out long before primates evolved. |

|

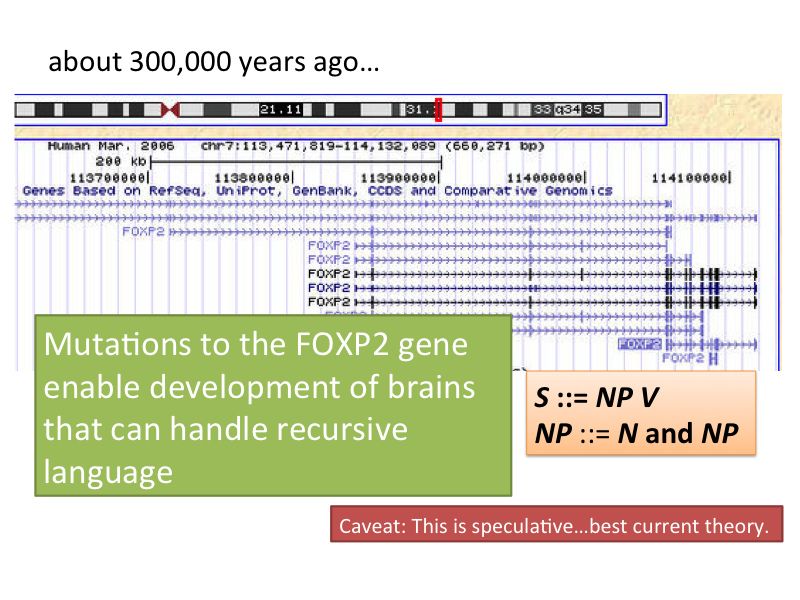

Homanids evolved really powerful brains. Speculatively, the most important step was a mutation to the FOXP2 gene that enabled the ability to use recursive languages. Unlike the finite languages, non-human primates are able to learn, recursive languages allow infinitely many things to be expressed and understood from a small number of rules. As a computer scientist, I really hope this is true, but the science is very shaky and like most things in biology, the real story is probably much more complex. The Derived FOXP2 Variant of Modern Humans Was Shared with Neandertals, The FOXP2 Story |

Bigger brains and language enabled us to communicate and share knowledge and operate in larger groups, and get better at dominating our environment. Eventually, we got so good at it to develop cooking so we didn't need to spend most of our time chewing, and agriculture so we didn't need to spend most of our time chasing food around and could invest in permanent settlements, growing to civilizations large enough to require complex taxation systems (and motivating the development of the mathematics needed to implement them).

By a few thousand years ago, we had gotten so absurdly and unimaginably efficient as a species that we could afford to have a handful of members who were completely unproductive - not producing a single calorie of food, or even ruling over others who did. So rich, by the 1500s, in fact, to be able to support people with the two most unproductive roles imaginable: professors and lawyers! Our next major advance was done by someone who was both: Gottfried Wilhelm von Leibniz.

|

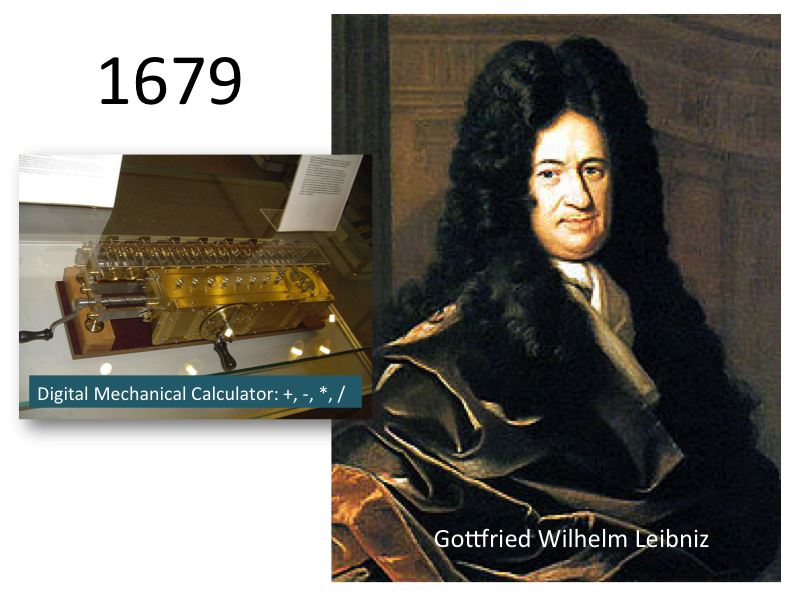

By the 1600s, humans had started to make significant computing machines. Leibniz built machines that could do encryption and perform all the basic arithmetic operations. |

|

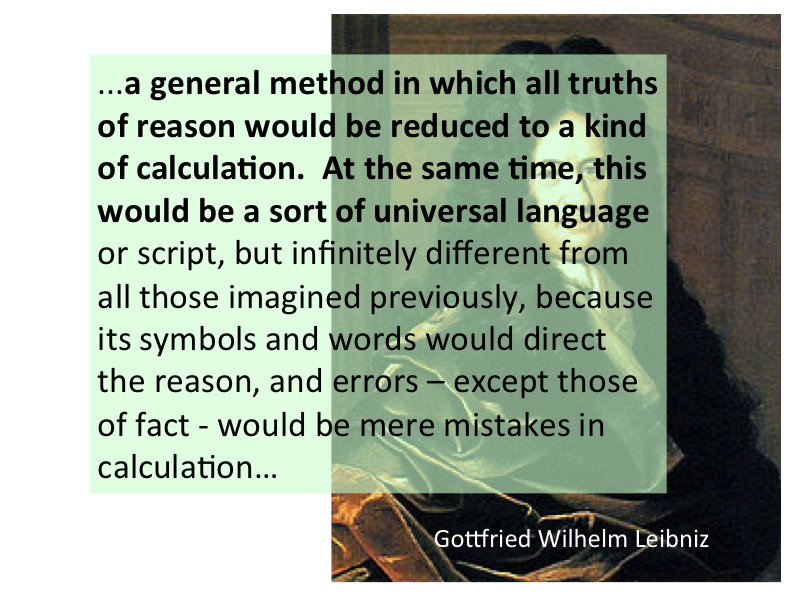

A few other computing machines were built around this time (e.g., Blaise Pascal's and Wilhelm Schickard's, but it was Gottfried Leibniz who made the huge intellectual and pragmatic leap to see the computing machines could be universal. |

|

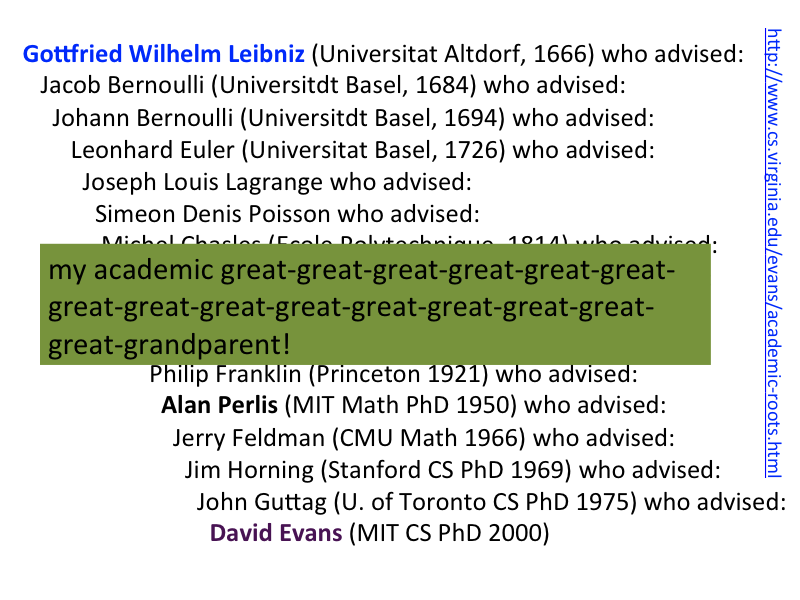

Although 1679 seems like a long time ago, its only 15 academic generations (which happen much more quickly than biological ones). I'm honored to have Leibniz as my academic great14-grandparent! |

|

At this point, we're intellectually 99.9999% of the way there to the first Android activation. (Okay, I'm counting getting from the origin of the universe to human language as about 99.99% of the way.) Actually achieving Leibniz' vision of universal computing machines, though, will take a few hundred more years of advances in physics, machinery, and mathematics. |

The Path to Practical Computers

Big engineering projects require big resources. War is a great way to advance technology, especially war that puts a cultures very survival at stake. Its too bad it requires so much death, suffering and destruction to provide the focus, motivation, and concerted resources to do really big things. (Some have argued more compellingly that the only real important development in human history was the invention of the steam engine and the industrialization it led to.)

|

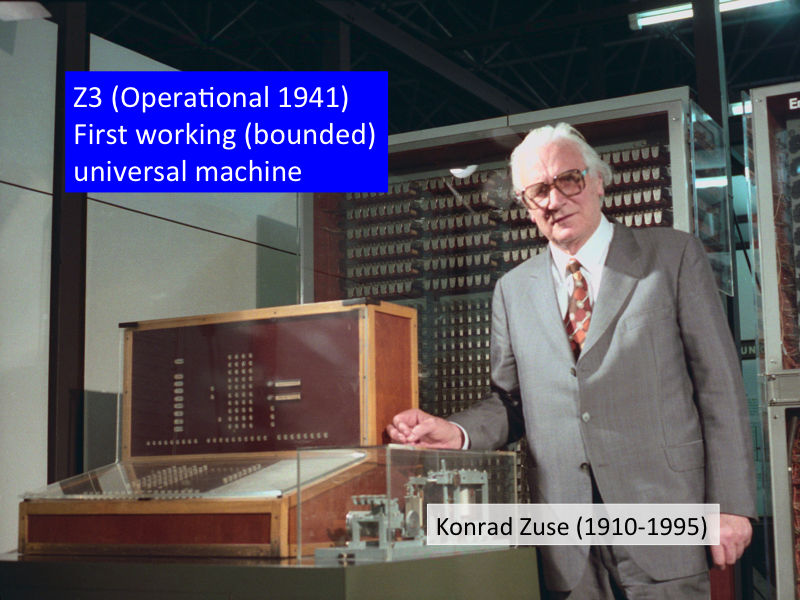

By 1941, Konrad Zuse had built a working "Turing-complete" computer. (Of course, we can't really talk about any physical machine as being truly universal, since its capabilities are limited by finite memory and operating time. But, extrapolating Zuse's design to a machine with unlimited memory and time, it would be a universal computing machine.) Zuse was not an overt Nazi, but was working in Berlin funded by the Nazi government. |

|

For context, this is what the world looked like in 1941. Other than that little spec of blue in the North Atlantic, pretty much the rest of the then-industrialized world was either controlled by the Axis, in a pact with them (Soviet Union), or at best neutral (USA). A few thousand people were working at a small manor and dozen hastily built huts at Bletchley Park, midway between Oxford and Cambridge, building machines that could do operations useful for cryptanalysis, but nothing close to the capabilities of the Z3. |

|

This is Bletchley Park, now a wonderful museum. See more pictures from my 2004 visit. |

|

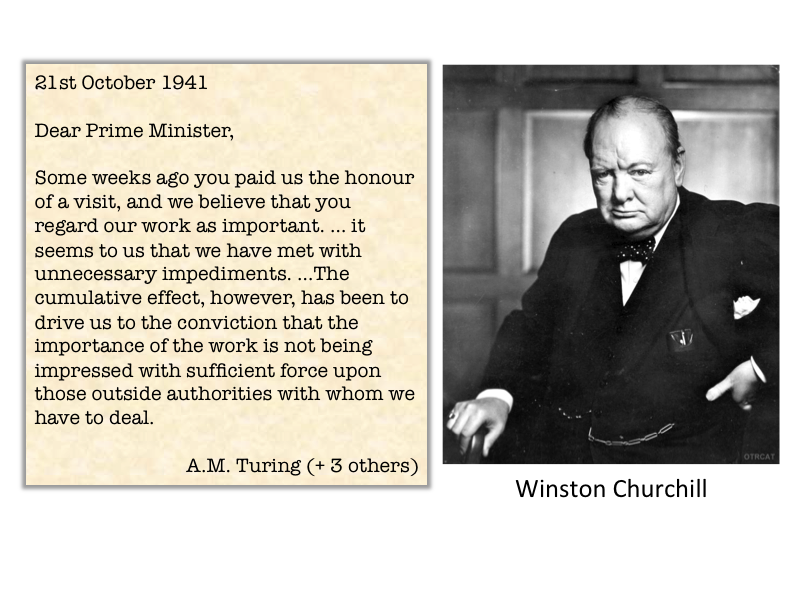

A group of four people working there, including most famously Alan Turing, circumvented the chain of command and wrote a letter directly to Prime Minister Churchill. The letter explained some of the impediments to their effective work and asked for help with resources (e.g., "We are intercepting quite a substantial proportion of wireless traffic in the Middle East which cannot be picked up by our intercepting stations here... Owing to shortage of trained typists, however, and the fatigue of our present decoding staff, we cannot get all this traffic decoded. This has been the state of affairs since May. Yet all that we need to put matters right is about twenty trained typists."). Anyone who wants to see how to write an effective funding proposal should stop now, and go read the entire two page letter. |

|

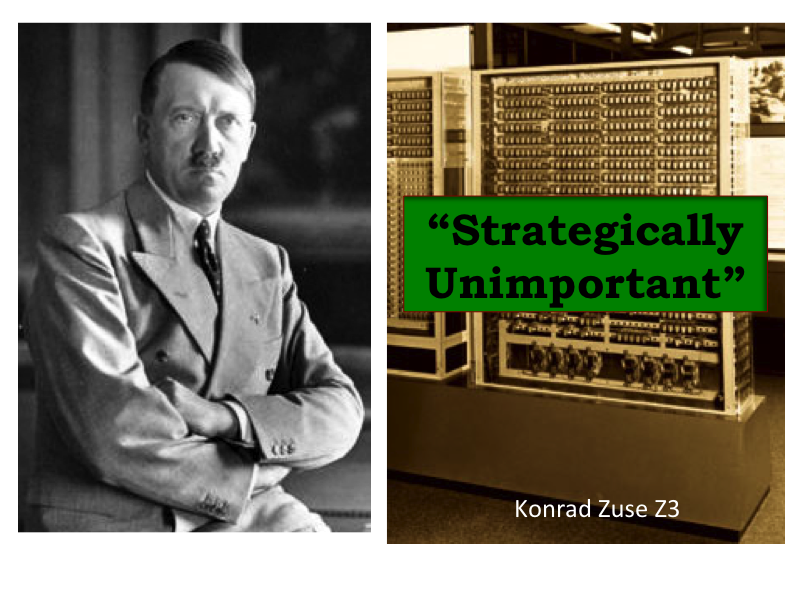

I'll keep you in suspense as to how Churchill responded to the letter (although I'm guessing even non-history majors among you have some idea how the overall story turns out!), to get back to Zuse's efforts in Germany. Requests for support to develop a successor to the Z3 were denied as "strategically unimportant". (Despite the picture on the slide, it is unlikely that these requests ever reached the level of the Führer.) |

|

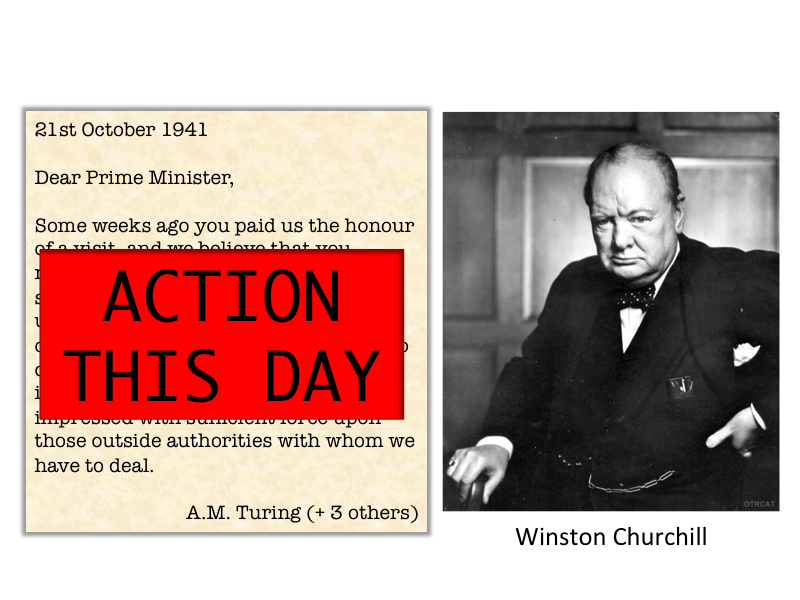

Churchill's response to Turing's letter: ACTION THIS DAY. Turing and colleagues got everything they needed. |

|

By 1943, Bletchly Park had built Colossus, a (somewhat) programmable computing machine used to break the Lorenz cipher the Nazi's used to communicate between their major conqured capitols across Europe. For more on the amazing story of Colossus, see this segment from my Applied Cryptography class. |

|

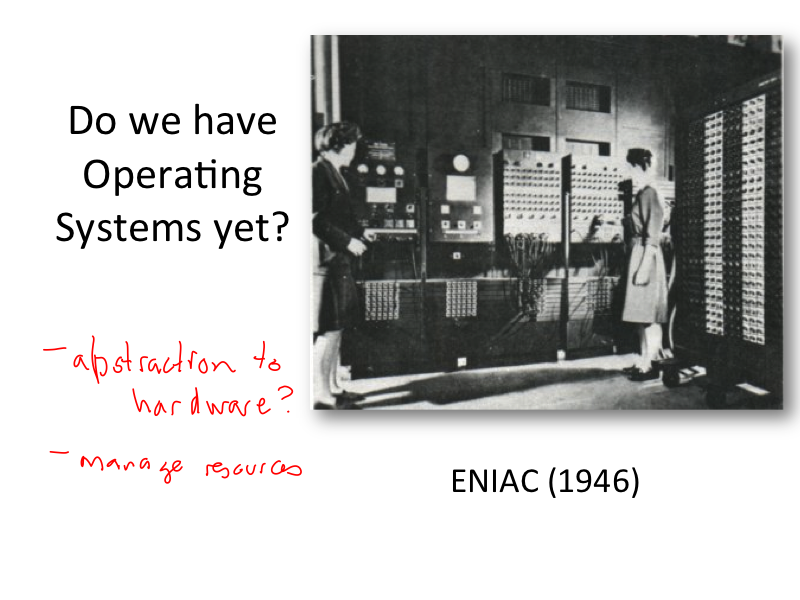

By the end of World War II, a number of programmable computing machines were under development, including the ENIAC, designed by John Mauchly and J. Presper Eckert at the University of Pennsylvania to calculate artillery tables for the US Army. |

The First Operating Systems

Although there are many possible definitions of an operating system, we defined an operating system (strictly) as a system that both (1) provides abstractions to hardware resources, and (2) manages how programs use those resources.

|

To decide if the ENIAC had an operating system, we need to understand how it was programmed. |

|

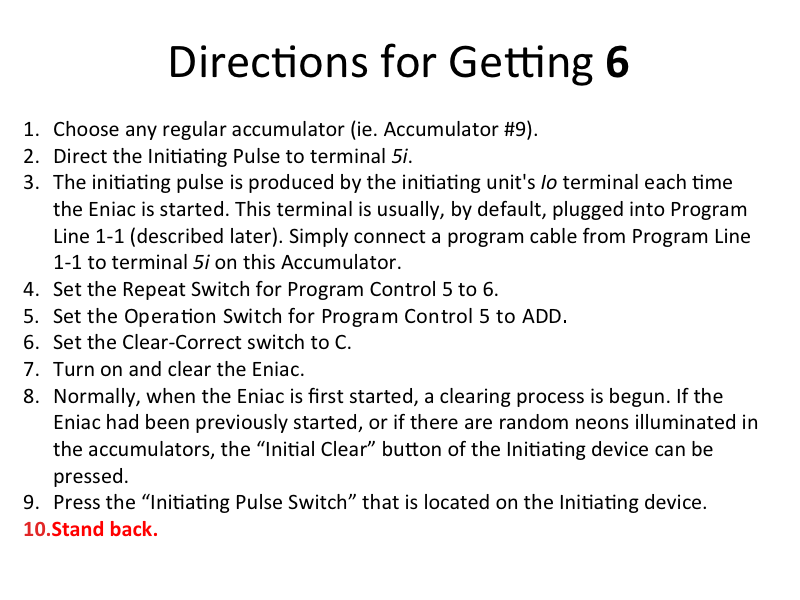

Here's how to assign the value 6 to a memory location in the ENIAC. Whether or not something provides an abstraction is a fairly fuzzy question, but you can be pretty confident that if programming the hardware requires the operator to stand back, it does not provide an abstraction to hardware resources. |

|

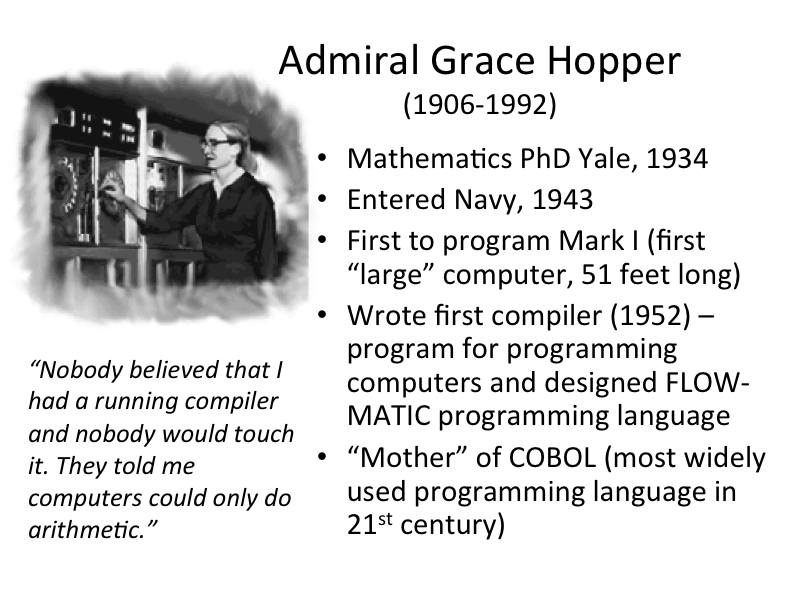

The "great Hop forward" in programming abstractions, was the idea that instead of controlling the hardware directly, we could write programs (that is, compilers) that produce the machine-level programs for us given programs written in a higher-level language. |

|

Admiral Grace Hopper was amazing. Check out her showing David Letterman what a nanosecond is. |

|

Definitely making progress, but not quite ready to activate Android. In particular, we don't yet have an operating system by our definition: need to manage resource use, not just provide abstractions. |

|

It took one more imminent threat to survival to accelerate the progress toward our first Android activation. |

|

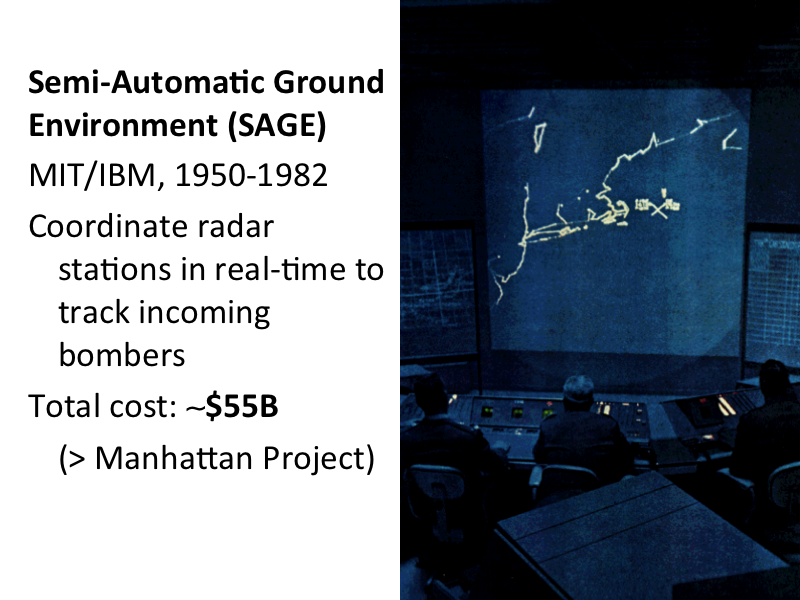

In the days before ICBMs, the military's main fear was that a single bomber could fly over the Arctic to reach a US city and obliterate it with an atomic bomb. Attempting to prevent this required radar far more sophisticated than anything developed during WWII, including the ability to track a single incoming bomber across multiple radar stations. This required really powerful computing, where speed was of the essence. |

|

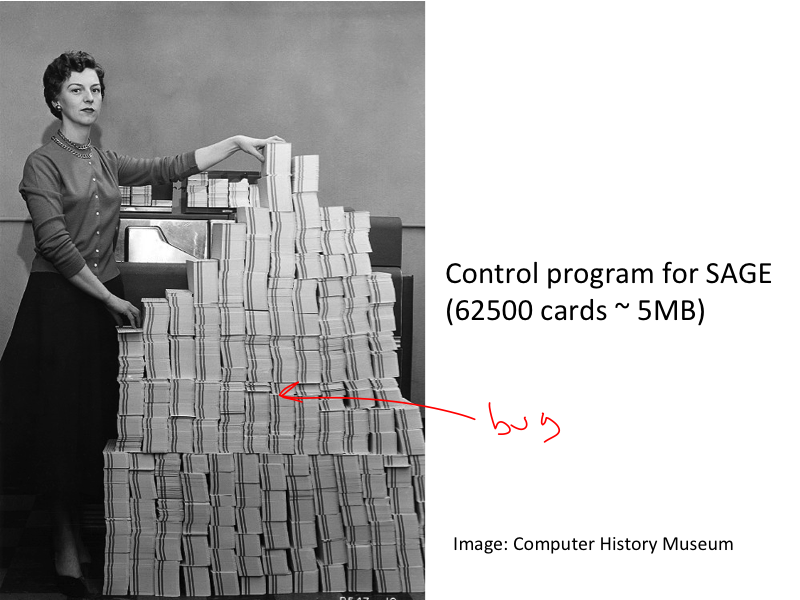

Here's the program for SAGE. Hopefully none of the cards have hanging chads. (For comparison, the 1.0 version of the Linux kernel was 4.7MB.) |

|

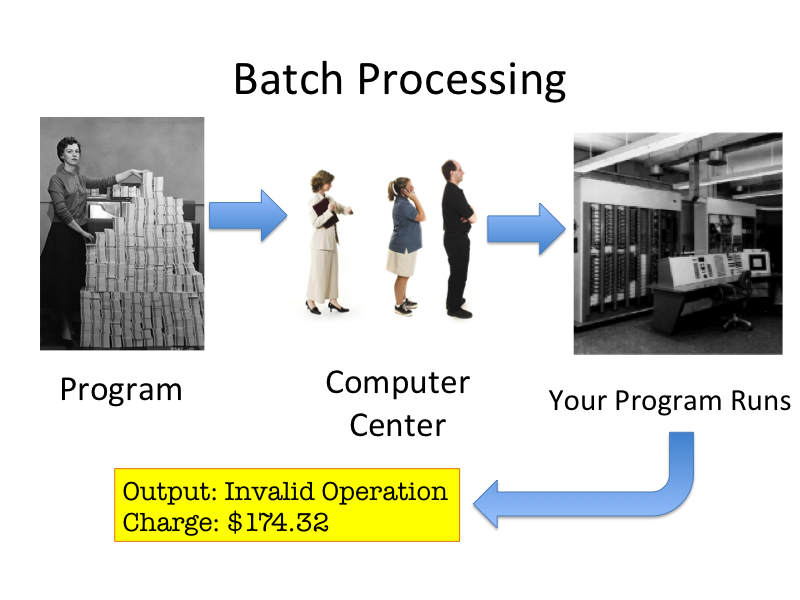

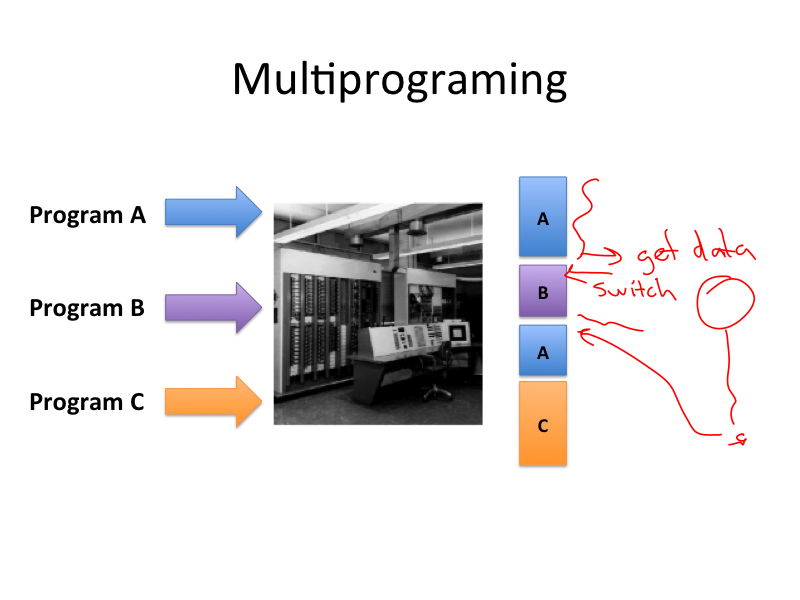

Batch processing runs one program at a time. An operator sets up the program in the computer and starts the program, which has full control over all the machine hardware until it finishes. The operator collects the result, and sets up the computer to run the next program. |

|

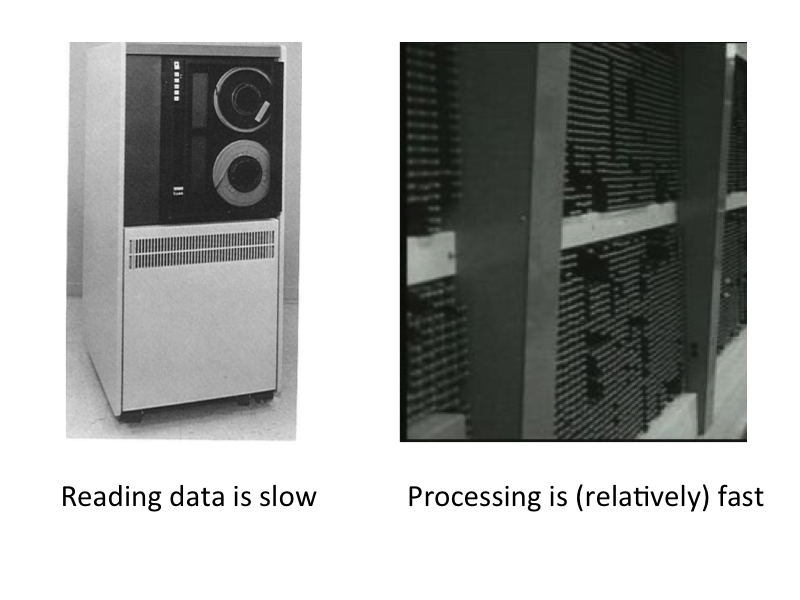

For the things people wanted to do with computers in the 1950s, batch processing really isn't as bad as it sounds. People were cheap, relative to the cost of the computer, so it wasn't a big deal to have lots of people wasting time. But computers were really really expensive! When the program needed to do something slow, like read some data from a tape, it was a huge waste of an expensive resource for the computer to be sitting around waiting. (Some people around this time were crazy enough to start thinking about interactive computing...but that's another story.) |

|

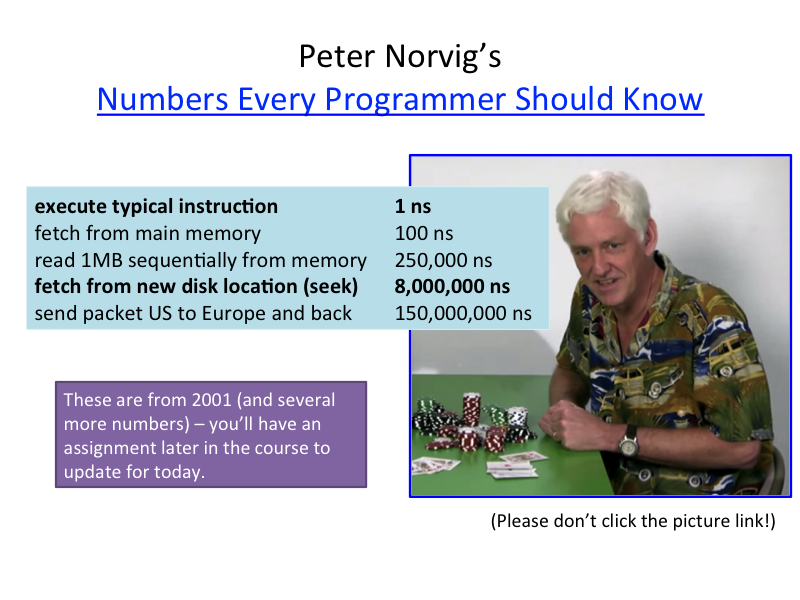

I'm not sure what the relative numbers were like for these machines, but probably not that different from what they are today. Getting data from slow memory isn't just a bit of a waste of the processor - each disk read is about 8 million wasted instructions: Peter Norvig's numbers every programmer should know. (These are from 2001, and selected from the full list. You'll come up with an updated list later in the course. Only a fool would go all-in against Peter Norvig, though.) |

|

So, we need a way to stop wasting all those valuable computers sitting around waiting for tapes to spin. The solution is to run another program instead of just waiting. |

Real Operating Systems

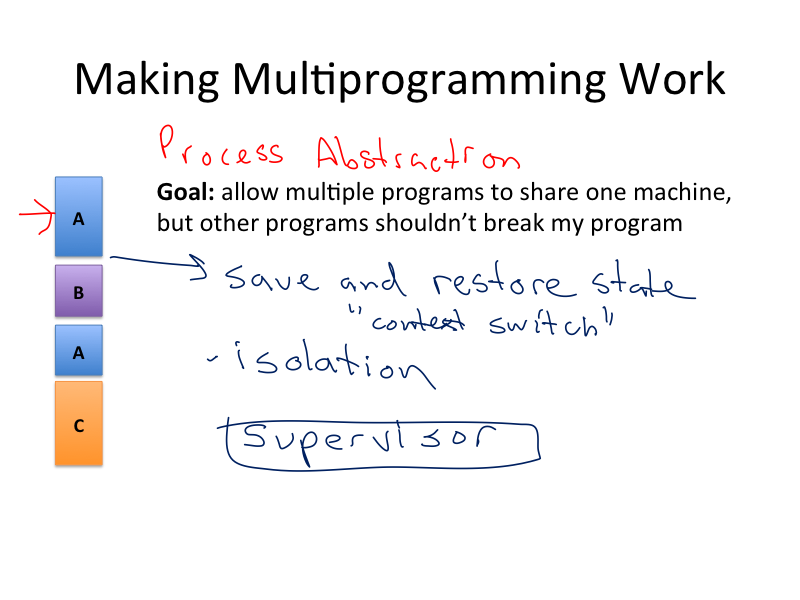

To do this we need a real operating system. We need a way to allow multiple programs to share one expensive computer, but provide each program with the process abstraction, that it owns the whole computer, and is running on it just like it would be if it were running as a batch process.

|

If we are going to run multiple programs on one computer without them interfering with each other, we need one special program that can control how the other programs run. This kernel has special access to the hardware and must be able to do some things the other programs cannot do. |

|

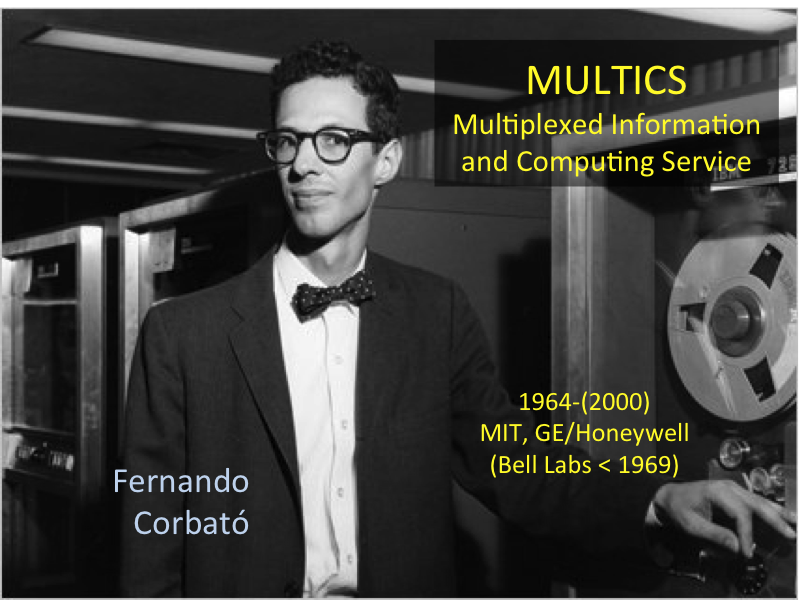

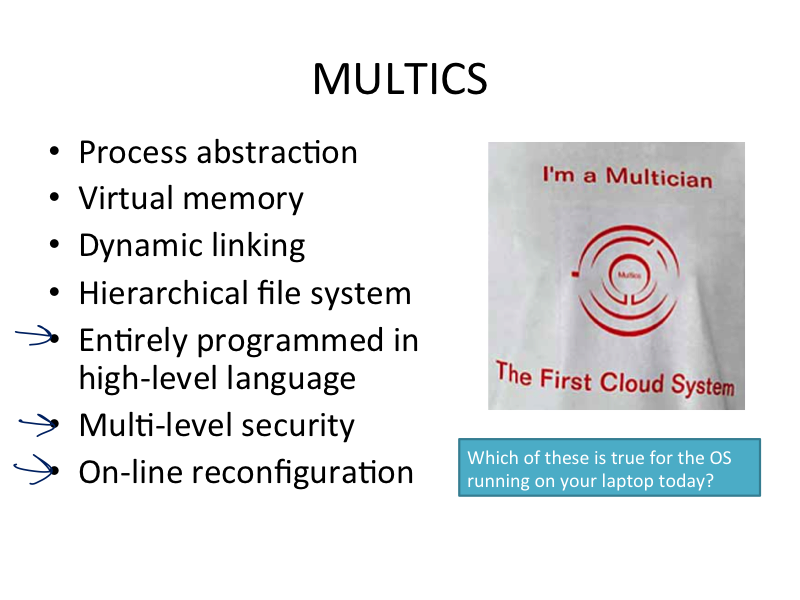

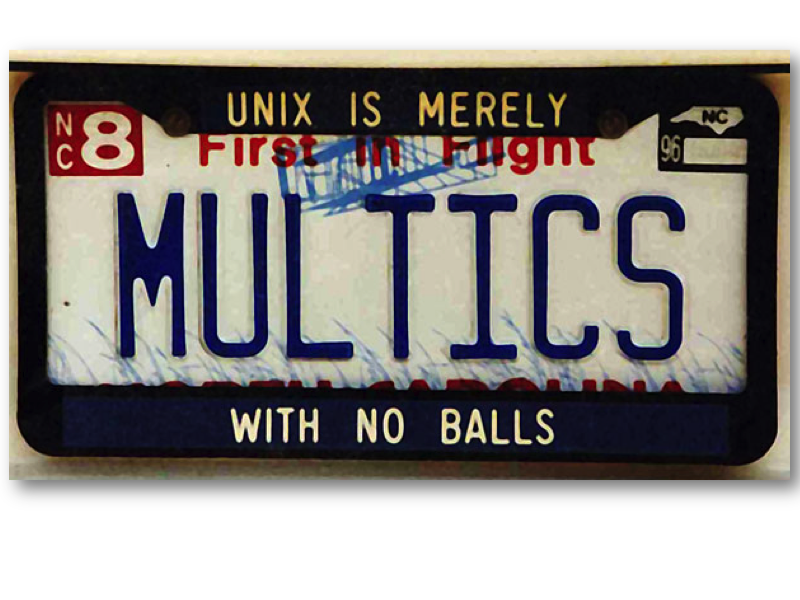

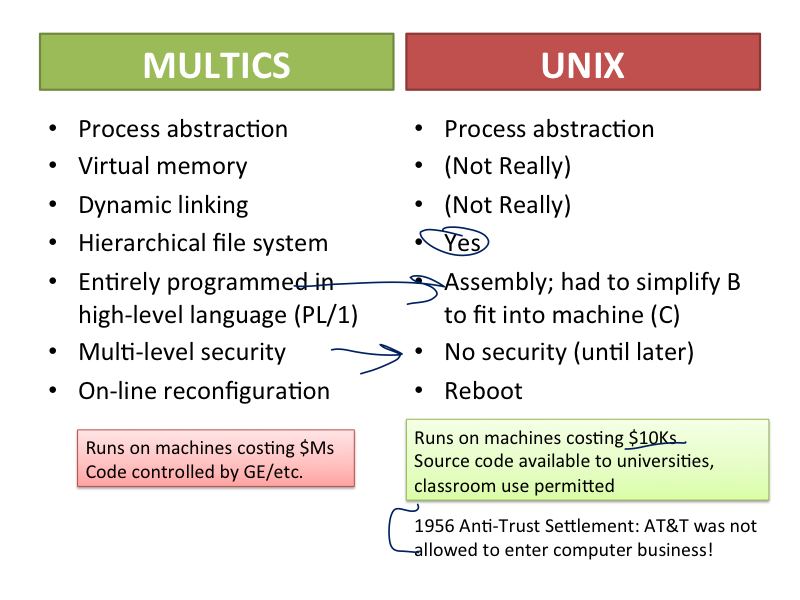

The first really significant operating system to provide a real process abstraction was MULTICS, developed starting in 1964 at MIT (with lots of partners). |

|

In many ways, MULTICS was arguably better than the operating system you are running today to view this page. |

|

So, why did it take forty more years before the first Android activation? |

Computing for Everyone

|

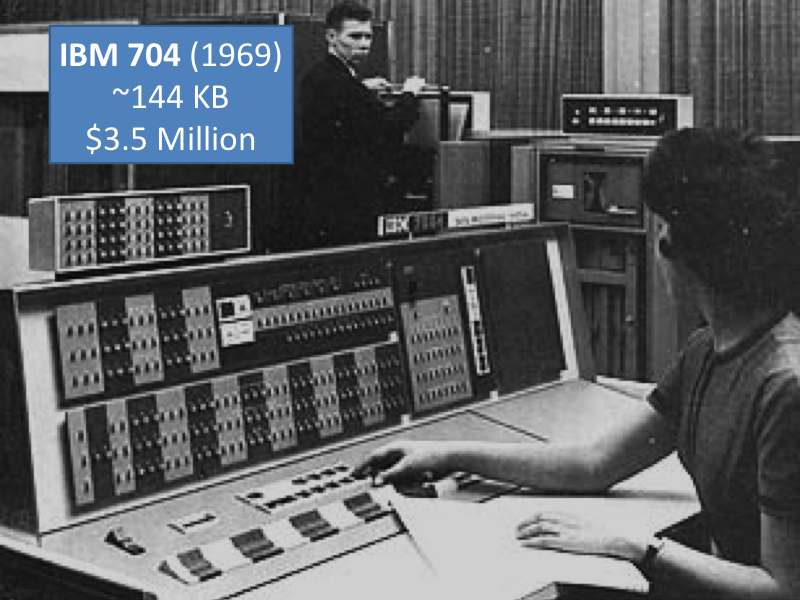

If you wanted to run MULTICS in 1969, you needed a truly astounding amount of memory! We're talking scores of kilobytes (nearly half as much memory as the picture of this slide!) In 1969, this cost about $3.5M (that was back when a million dollars was still a lot of money). |

|

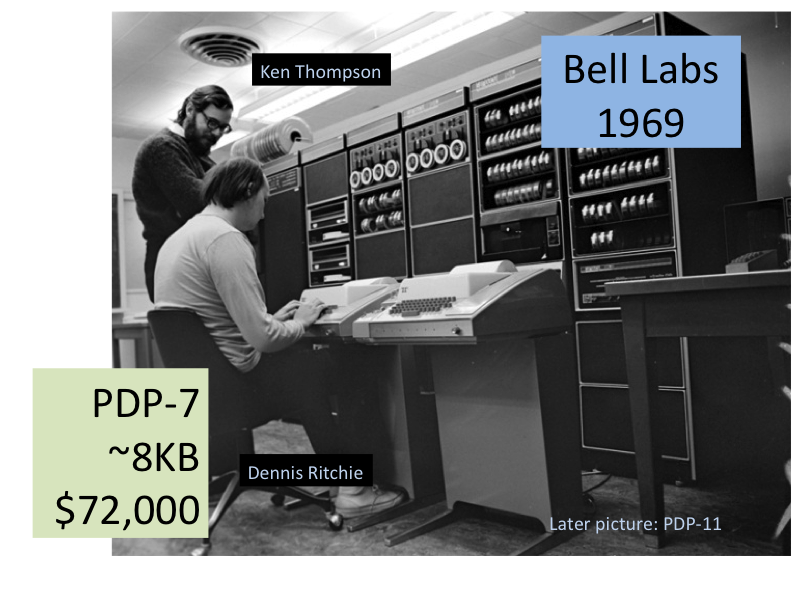

Smaller computers, though, were starting to get cheap enough that big enough companies with national monopolies could even afford to have ones just for engineers to play with. At Bell Labs, Dennis Ritchie and Ken Thompson got access to a PDP-7 and wanted to play a space game they had played on MULTICS (Bell Labs had been a partner for the MULTICS project from 1964-1969) on it. Unfortunately, it only had about 4K of memory, so there was no way to run any of the MULTICS code on it. |

|

So, they had to build something much simpler. "My guess is that some AT&T lawyers eventually decided that the punned name (Unics) did not reflect well on the corporate image, and insisted that it be changed it to Unix. But that's only a guess." - Peter Neumann |

|

Unix basically gave up almost everything good about MULTICS to fit into the available memory. They couldn't even fit the B language on it, so had to invent a simpler language, C, and built a compiler for it (saving space by doing things like changing the assignment operator from the sensible := to the mathematically confusing =, saving hundreds of bytes of storage at the bane of millions of future CS students). But, Unix could run on machines that organizations other than just the Department of Defense could afford! |

|

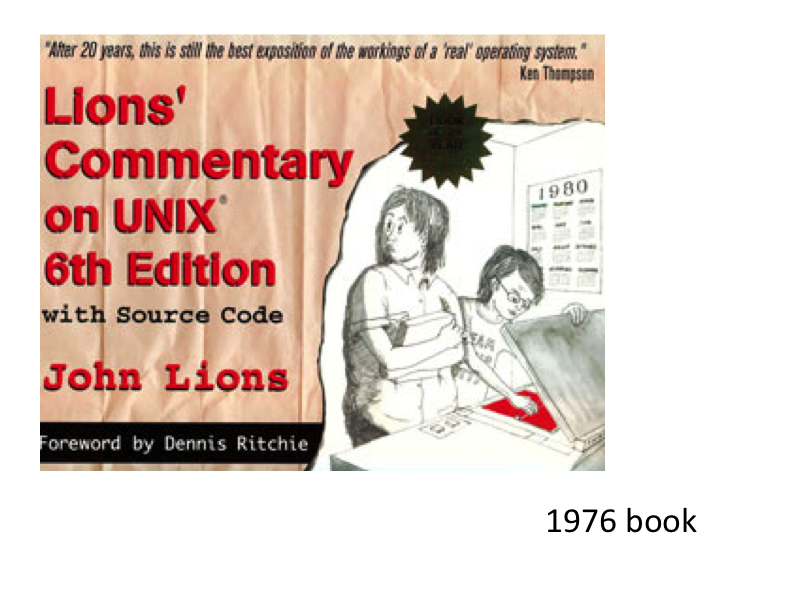

It was even better than that: AT&T was subject to a consent decree to resolve anti-trust issues with their telephony monopoly. They were barred from entering the computer market, and required the license anything they developed to anyone who wanted it. Unix spread like kitkats through academic computer science departments. John Lions, an Austrailian professor, wrote a book that explained the Unix source code. MIT's graduate operating systems engineering course still basically uses it today! |

|

By the end of the 1970s, AT&T's legal and business situation had changed, and they decided it was time to try and make some money off Unix. They disallowed using the Unix source code in classes, and blocked the use of Lions' book. |

|

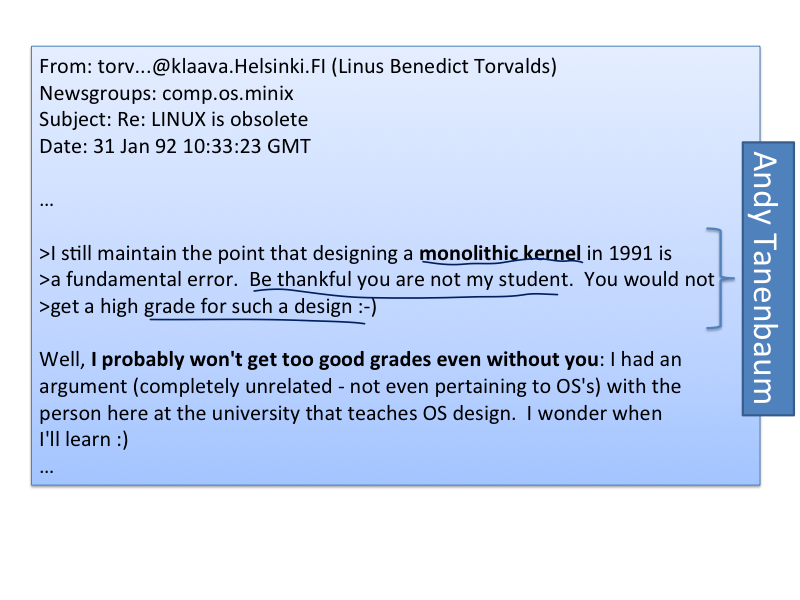

People still wanted to teach about operating systems by using actual implementations. Andrew Tanenbaum wrote a tiny operating system, MINIX, and a textbook including its (copyrighted) source code. |

|

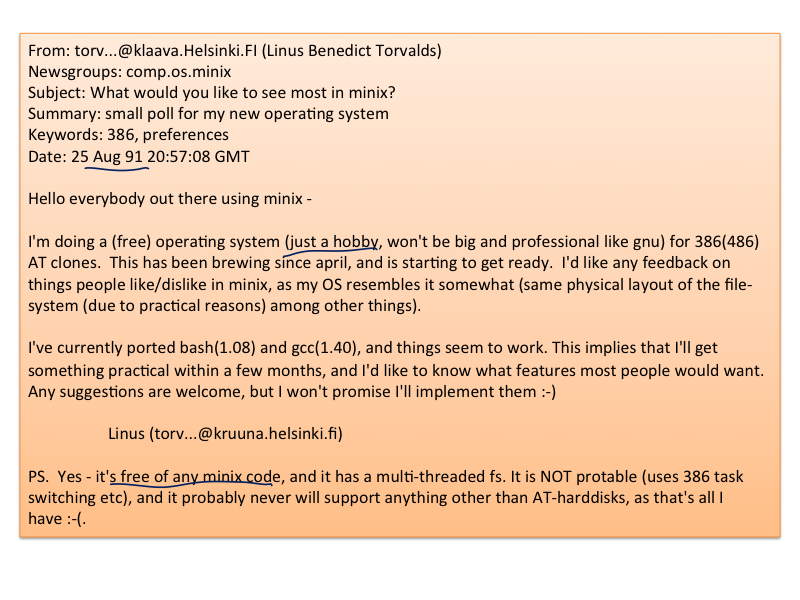

A few years later, a student in Finland got an IBM PC and decided to start writing a new OS as a hobby. He decided to avoid using any MINIX code, so his hobby system wouldn't be encumbered by any copyrights. |

|

Despite some flaws in his design, Linus persisted. We'll talk more about different OS designs in later classes, but the main issue is how much code should be in the kernel, running with the special privileges. Linux' monolithic kernel runs the entire operating system in supervisor mode, increasing the risk of bugs in code with supervisor privileges. |

|

Linux 1.0 was released in 1994 (176,000 lines of C code). It was licensed under an open source license that allows anyone to use and modify the code, including for commercial purposes, so long as they continue to distribute the modified source code. |

|

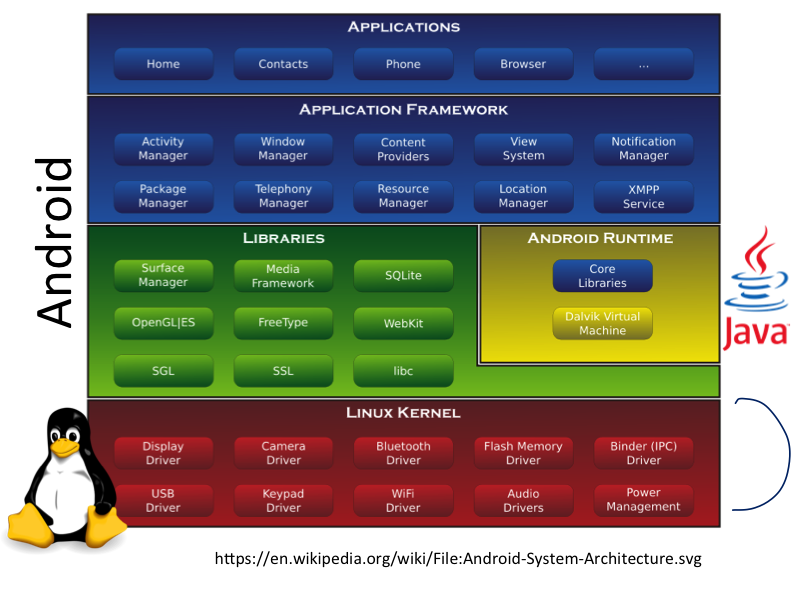

Many developers built systems based on the Linux kernel. Andy Rubin and colleagues started building an operating system targeted for mobile devices on the Linux kernel starting in 2003, with the goal of building a smartphone OS to compete with Symbian. Google acquired Android in 2005. |

|

Its been an amazing 13.8 Billion years! |

|

From nothing, to a world where an operating system can be on a billion devices in in five years, and a senator can play on-line poker instead of having to listen to boring discussions about whether or not to bomb other countries. |

|

Evolution is often mispresented as an inevitable path of progress leading to the ultimate goal of humanity. Lots of things about our current computing ecosystem seem inevitable and unchangable, but the actual story is much more meandering and serendipitious. Science and engineering play a large and important role, but so do politics, law, marketing, and individual acts of wisdom, kindness, and foolishness. |

The Moral?

War and scarity are powerful motivators, but in the long run, openness and altruism always win.

Its a really exciting time in computing! The next new platform to reach a billion devices should take much less than five years, and we probably haven't yet heard of it.

Action Items

Do at least three of these six choices by Monday:

- Vote in the poll about the playing poker in the Senate picture.

- Watch Bret Victor's The Future of Programming (talk at DBX conference, 30 July 2013)

- Watch Grace Hopper on the David Letterman show (2 October 1986)

- Read Fernando J. Corbató's Turing Award Talk, On Building Systems that Will Fail

- Read some Multicians' stories

- Watch Andrew McAfee's talk on Are Droids Taking our jobs? (its really about the big history of human innovation and progress)

Assignments due: Problem Set 1 is due on Tuesday, September 10 (11:59pm).