The myth of “enhance this image.”

Arthur C. Clarke’s third law states:

> Any sufficiently advanced technology is indistinguishable from magic.

It turns out the technology doesn’t actually need to be advanced; it just needs to be unknown to the audience. And because computing is so persistently left untaught, it is unknown enough to most people to serve as the “magic” of today’s motion-picture entertainment.

One of the standard “spells” in media today is the image enhancer. There’s a low-resolution image of something; a computer “enhances” it and it gradually becomes higher resolution.

No such spell exists.

The notion of image enhancement probably came from the early days of the Internet, when progressively encoded JPEGs were popular. Because each image took a non-trivial amount of time to download, this encoding arranged the data so that even the first bit received was meaningful on its own. So instead of sending the pixels one row at a time:

| 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|
| 7 | 8 | 9 | 10 | 11 | 12 |
| 13 | 14 | 15 | 16 | 17 | 18 |
| 19 | 20 | 21 | 22 | 23 | 24 |
| 25 | 26 | 27 | 28 | 29 | 30 |
| 31 | 32 | 33 | 34 | 35 | 36 |

you’d put a scattering of pixels from throughout the image first, then the in-between pixels later:

| 1 | 10 | 5 | 11 | 2 | 12 |
|---|---|---|---|---|---|
| 13 | 14 | 15 | 16 | 17 | 18 |
| 6 | 19 | 7 | 20 | 8 | 21 |
| 22 | 23 | 24 | 25 | 26 | 27 |
| 3 | 28 | 9 | 29 | 4 | 30 |
| 31 | 32 | 33 | 34 | 35 | 36 |

That way, you quickly get a rough, low-resolution version of the whole picture, and the details fill in as more data arrives. JPEGs actually work a little differently: rather than the pixels themselves, they send frequency coefficients from which blocks of pixels are reconstructed, coarse ones first. The principle is the same.
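The second grid above can be produced mechanically. The following Python sketch is an illustration, not the actual JPEG or PNG algorithm; the names `progressive_order` and `preview` are hypothetical. It sends the pixels on a stride-4 lattice first, then the remaining pixels on a stride-2 lattice, then everything else, each pass in row-major order. It assumes each stride divides the previous one and that the last stride is 1. `preview` shows the rough picture available once a pass has arrived: every pixel simply copies the nearest already-received pixel above and to the left.

```python
def progressive_order(width, height, strides=(4, 2, 1)):
    """Return a grid where grid[y][x] is the 1-based position at which
    pixel (x, y) is sent. Each pass walks a finer lattice in row-major
    order and skips pixels that an earlier pass already sent."""
    order = [[0] * width for _ in range(height)]
    sent = 0
    for stride in strides:
        for y in range(0, height, stride):
            for x in range(0, width, stride):
                if order[y][x] == 0:  # not sent by an earlier pass
                    sent += 1
                    order[y][x] = sent
    return order


def preview(image, stride):
    """Rough preview after the pass with this stride has arrived: each
    pixel copies the received pixel at the top-left corner of its
    stride-by-stride cell."""
    return [[image[y - y % stride][x - x % stride]
             for x in range(len(image[0]))]
            for y in range(len(image))]


for row in progressive_order(6, 6):
    print(" ".join(f"{n:2d}" for n in row))
```

Running it prints the same send order as the second grid. The preview contains no new detail. It only stretches the few pixels that have arrived so far across the picture until the later passes replace them.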

The important thing to notice about progressively encoded images is that the finer details are filled in as more information is received. The problem with trying to write a program that adds detail to an existing image is that there is no influx of new information. Computing can make dim things brighter, detect and remove various kinds of patterns that might distract the eye, and so on, but it cannot add information where none exists. A low-resolution image is always low-resolution.
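Making an image bigger is easy. Making it more detailed is not. The minimal Python sketch below uses hypothetical helper names, not any library's API. It enlarges a tiny image with nearest-neighbour upscaling, which multiplies the pixel count by sixteen. Every new pixel is a copy of an old one, though, so discarding the copies returns the original exactly.

```python
def upscale_nearest(image, factor):
    """Enlarge an image by repeating each pixel factor x factor times."""
    return [[image[y // factor][x // factor]
             for x in range(len(image[0]) * factor)]
            for y in range(len(image) * factor)]


def keep_every_nth(image, factor):
    """Keep one pixel (the top-left) from each factor x factor block."""
    return [row[::factor] for row in image[::factor]]


small = [[0, 255],
         [255, 128]]
big = upscale_nearest(small, 4)

print(len(big), "x", len(big[0]))       # 8 x 8: sixteen times the pixels
print(keep_every_nth(big, 4) == small)  # True: no new information was added
```

Smoother methods such as bilinear or bicubic interpolation blend neighbouring pixels instead of copying them. They look less blocky, but every value they produce is still computed only from the pixels that were already there.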

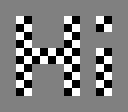

As an example of why information can’t be reconstructed, consider the following low-resolution image.

I made that image by taking this image:

and halving its resolution in each direction, so that every 2×2 block of four pixels becomes a single pixel. The problem is that when I turn four pixels into one, I end up with grey whether the four were half black and half white or were just grey to begin with. The original detail is lost. I don’t care how smart your computer is; it isn’t going to figure out that there used to be text in the grey square.
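The loss is easy to demonstrate. This Python sketch is an illustration using a hypothetical `downsample_2x2` helper. It assumes grey levels from 0 (black) to 255 (white) and image dimensions that are even. It averages each 2×2 block into one pixel, as described above, and applies that to two very different 4×4 images: a fine black-and-white checkerboard and a flat grey square.

```python
def downsample_2x2(image):
    """Halve the resolution by averaging each 2x2 block into one pixel.
    Assumes even width and height; 0 = black, 255 = white."""
    return [[(image[y][x] + image[y][x + 1]
              + image[y + 1][x] + image[y + 1][x + 1]) // 4
             for x in range(0, len(image[0]), 2)]
            for y in range(0, len(image), 2)]


# Every 2x2 block is two black and two white pixels.
checker = [[0 if (x + y) % 2 == 0 else 255 for x in range(4)] for y in range(4)]
# No detail at all, just mid-grey.
flat_grey = [[127] * 4 for _ in range(4)]

print(downsample_2x2(checker))    # [[127, 127], [127, 127]]
print(downsample_2x2(flat_grey))  # [[127, 127], [127, 127]]
```

Both inputs produce identical output. Any "enhancer" handed that 2×2 grey image has no way of knowing which original it came from, because the difference between them is simply not in the data.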

To make matters worse, low-resolution images can actually show detail that was not present in the original. If, for example, some of the groups of four pixels I merged had contained three grey pixels and one white one, we’d get this:

Not only is the original text no longer visible, but something else is visible instead, something that wasn’t in the original. This phenomenon is called “aliasing” and avoiding it is a major concern in computer graphics.
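Aliasing can be shown in one dimension. The Python sketch below swaps in a simpler technique than the averaging used above: point sampling, which keeps every second pixel and throws the rest away. The helper name is hypothetical. It takes thin white lines spaced 3 pixels apart, which is too fine a pattern to survive halving, and samples them.

```python
def point_sample(row, factor=2):
    """Downsample a row of pixels by keeping every factor-th one."""
    return row[::factor]


# Thin white lines, one every 3 pixels.
stripes = [255 if i % 3 == 2 else 0 for i in range(12)]
print(stripes)  # [0, 0, 255, 0, 0, 255, 0, 0, 255, 0, 0, 255]

halved = point_sample(stripes)
print(halved)   # [0, 255, 0, 0, 255, 0]
```

The result still has white lines, but they are now 3 output pixels apart, which is 6 pixels in the original's scale. That gives half as many lines, twice as far apart, and that pattern was never in the original. Graphics software usually blurs (low-pass filters) an image before shrinking it so that detail too fine for the new resolution fades to grey instead of turning into false patterns like this.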
