ZFS

All of our network storage is now hosted on ZFS filesystems. We have three physical ZFS servers: zfs1, zfs2, and zfs3. There is an additional DNS CNAME for zfs4, but it simply points to zfs1. These servers run SPARC Solaris 11.3 and act as head nodes in front of our SAN storage. The SAN is primarily made up of Nexsan storage appliances, which include the E48 series and SATABeast disk arrays.

We have also deployed two new Linux-based ZFS file servers, named corezfs01 and corezfs02. These serve the new user and project filesystems (see below), and their storage is physically housed on a Hitachi G200 disk array.

You can find a listing of all ZFS Pools and Datasets here.

Pools

Our disk storage is broken up into storage volumes by the disk arrays. These volumes are then added to ZFS pools on the ZFS servers. The pools that were created on the new system all start with zf. We have 25 zf partitions, zf1-zf25. There are several legacy pools that were imported or migrated to ZFS, but still start with af, if or uf.

We can see a listing of pools on a server by running zpool list:

root@zfs2:~# zpool list
NAME        SIZE   ALLOC  FREE   CAP  DEDUP  HEALTH  ALTROOT
if1.tank    5.96T  2.98T  2.98T  49%  1.00x  ONLINE  -
if2.tank    5.96T  4.00T  1.96T  67%  1.00x  ONLINE  -
if3.tank    5.96T  2.04T  3.92T  34%  1.00x  ONLINE  -
if4.tank    5.96T  530G   5.44T  8%   1.00x  ONLINE  -
if5.tank    5.96T  2.77T  3.19T  46%  1.00x  ONLINE  -
if6.tank    5.96T  4.47T  1.49T  74%  1.00x  ONLINE  -
rpool       136G   19.6G  116G   14%  1.00x  ONLINE  -
zf10.tank   5.97T  2.50T  3.47T  41%  1.00x  ONLINE  -
zf11.tank   5.97T  11.1G  5.96T  0%   1.00x  ONLINE  -
zf12.tank   5.97T  587G   5.40T  9%   1.00x  ONLINE  -
zf7.tank    5.97T  1.03T  4.94T  17%  1.00x  ONLINE  -
zf8.tank    5.97T  5.11T  878G   85%  1.00x  ONLINE  -
zf9.tank    5.97T  1.13T  4.84T  18%  1.00x  ONLINE  -
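Since pools such as zf8.tank in the listing above can run close to full, a small filter can pick out the pools above a chosen capacity. This is a minimal sketch, not an existing tool: the function name pools_over_cap and the threshold of 80 are assumptions made for illustration. It expects the tab-separated output of zpool list -H -o name,capacity on stdin.

```shell
# pools_over_cap THRESHOLD  (hypothetical helper, not part of ZFS)
# Reads "name capacity%" lines on stdin and prints the pools whose
# capacity is at or above THRESHOLD (an integer percentage).
pools_over_cap() {
    awk -v limit="$1" '{
        cap = $2
        sub(/%$/, "", cap)                      # strip the trailing percent sign
        if (cap + 0 >= limit + 0) print $1, $2  # numeric comparison
    }'
}

# Usage on a ZFS server (80 is an arbitrary example threshold):
#   zpool list -H -o name,capacity | pools_over_cap 80
```

The function only reads text, so it can be tried against saved zpool list output before being run on a live server.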

Importing and Exporting zpools

If a server has issues with a pool, sometimes you will find that the pool is no longer imported. If you do not see a pool that should be on a server then you may need to re-import the pool. Running zpool import will show you a list of all pools on the SAN that the server can see. We can import a pool by running zpool import poolname.

root@zfs2:~# zpool import
  pool: af4
    id: 13970819169355586171
 state: ONLINE
status: The pool was last accessed by another system.
action: The pool can be imported using its name or numeric identifier and
        the '-f' flag.
   see: http://support.oracle.com/msg/ZFS-8000-EY
config:
        af4 ONLINE
          c0t6000402001E016FE7C91F4EB00000000d0s1 ONLINE

  pool: af12.tank
    id: 11343109093909297116
 state: ONLINE
status: The pool was last accessed by another system.
action: The pool can be imported using its name or numeric identifier and
        the '-f' flag.
   see: http://support.oracle.com/msg/ZFS-8000-EY
config:
        af12.tank ONLINE
          c0t6000402001E016FE6BACBE7300000000d0s1 ONLINE

...

root@zfs2:~# zpool import poolname.tank

Be careful: this listing also includes pools that are actively imported and in use by other servers! Check that a pool is not in use elsewhere before importing it. You should normally be able to import a pool without the '-f' (force) argument, but you may need to force the import under certain circumstances (such as when there are errors or faults).
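Because the zpool import listing is long and mixes free pools with pools in use elsewhere, it can help to condense it to one line per pool before deciding what to import. The sketch below assumes the output layout shown above; the function name importable_pools is hypothetical. It only parses text and never imports anything itself.

```shell
# importable_pools  (hypothetical helper)
# Reads `zpool import` output on stdin and prints one "pool state" line
# per pool, with a note when the pool was last accessed by another system.
importable_pools() {
    awk '
        $1 == "pool:"   { name = $2 }
        $1 == "state:"  { state = $2 }
        $1 == "status:" && /last accessed by another system/ {
            note = " (last accessed by another system)"
        }
        # "config:" marks the end of the header fields for one pool
        $1 == "config:" { print name, state note; note = "" }
    '
}

# Usage (zpool import with no arguments only lists pools):
#   zpool import | importable_pools
```

A pool flagged as last accessed by another system may still be in use on that system, so the note is a prompt to check the other servers, not permission to force the import.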

Datasets

These pools house ZFS datasets, which we ultimately refer to as partitions. For example the pool zf15-18.tank on zfs3 contains two datasets, zf15-18.tank/zf15 and zf15-18.tank/zf18. These datasets are mounted on the ZFS servers as local filesystems and are then shared to the network via NFS and Samba.

Network Filesystems

ZFS has built-in integration with network filesystem protocols. We can see a listing of shares by running:

root@zfs2:~# share
IPC$ smb - Remote IPC
if1.tank/if1 /if1 nfs sec=sys,root=argo.cs.virginia.edu:ares.cs.virginia.edu:athena.cs.virginia.edu:zfs1.cs.virginia.edu:zfs2.cs.virginia.edu:zfs3.cs.virginia.edu:coresrv01.cs.virginia.edu:coresrv02.cs.virginia.edu
if1_smb /if1 smb -
if2.tank/if2 /if2 nfs sec=sys,root=argo.cs.virginia.edu:ares.cs.virginia.edu:athena.cs.virginia.edu:zfs1.cs.virginia.edu:zfs2.cs.virginia.edu:zfs3.cs.virginia.edu:coresrv01.cs.virginia.edu:coresrv02.cs.virginia.edu
if2_smb /if2 smb -
if3.tank/if3 /if3 nfs sec=sys,root=argo.cs.virginia.edu:ares.cs.virginia.edu:athena.cs.virginia.edu:zfs1.cs.virginia.edu:zfs2.cs.virginia.edu:zfs3.cs.virginia.edu:coresrv01.cs.virginia.edu:coresrv02.cs.virginia.edu
if3_smb /if3 smb -
if4.tank/if4 /if4 nfs sec=sys,root=argo.cs.virginia.edu:ares.cs.virginia.edu:athena.cs.virginia.edu:zfs1.cs.virginia.edu:zfs2.cs.virginia.edu:zfs3.cs.virginia.edu:coresrv01.cs.virginia.edu:coresrv02.cs.virginia.edu
if4_smb /if4 smb -
...
zf9.tank_zf9 /zf9 nfs sec=sys,root=argo.cs.virginia.edu:ares.cs.virginia.edu:athena.cs.virginia.edu:zfs1.cs.virginia.edu:zfs2.cs.virginia.edu:zfs3.cs.virginia.edu
zf9.tank_zf9 /zf9 smb -
zf9_smb /zf9 smb -
c$ /var/smb/cvol smb - Default Share

If there are issues at boot time (e.g. a pool is faulted), Solaris will sometimes put NFS and SMB into maintenance mode and no shares will be active. If this happens, running share produces no output. This can sometimes be fixed by running share -a. The output of dmesg is often useful for troubleshooting NFS/SMB issues.

NFS

In Solaris 11, NFS is handled by SMF (Service Management Facility). There are several NFS-related services:

root@zfs2:~# svcs | grep nfs
online Nov_20 svc:/network/nfs/fedfs-client:default
online Nov_20 svc:/network/nfs/status:default
online Nov_20 svc:/network/nfs/mapid:default
online Nov_20 svc:/network/nfs/rquota:default
online Nov_20 svc:/network/nfs/nlockmgr:default
online Nov_20 svc:/network/nfs/server:default

You can restart the main NFS server by running:

root@zfs2:~# svcadm restart nfs/server

Service scripts and log files can be found in /var/svc/manifest and /var/svc/log respectively.
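When shares go missing, a quick first check is whether the NFS and SMB services are in a state other than online (for example maintenance). The sketch below filters the output of svcs -H -o state,fmri; the function name services_not_online is hypothetical, and the two service names in the usage line are simply the ones mentioned on this page.

```shell
# services_not_online  (hypothetical helper)
# Reads "state fmri" lines on stdin and prints every service whose
# state is anything other than "online".
services_not_online() {
    awk '$1 != "online" { print $2 " is " $1 }'
}

# Usage on a Solaris ZFS server:
#   svcs -H -o state,fmri network/nfs/server network/smb/server | services_not_online
```

If a service is reported as being in maintenance, svcs -x generally explains why, and svcadm clear followed by the service name asks SMF to retry it once the underlying problem is fixed.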

SMB

Samba is also controlled through SMF, but there are important elements outside of these services (such as Kerberos and Active Directory).

To restart the main SMB service:

root@zfs2:~# svcadm restart svc:/network/smb/server

We have had several issues with Samba and Active Directory that have broken Samba shares. To test the SMB server from the ZFS server, run the following command:

root@zfs1:~# smbadm show-shares -A localhost
c$ Default Share
IPC$ Remote IPC
zf1.tank_zf1
zf1_smb
zf2.tank_zf2
zf20_smb
zf21_smb
zf22_smb
zf23_smb
zf24_smb
zf25_smb
zf2_smb
zf3_smb
zf4.tank_zf4
zf4_smb
zf5.tank_zf5
zf5_smb
zf6.tank_zf6
18 shares (total=18, read=18)

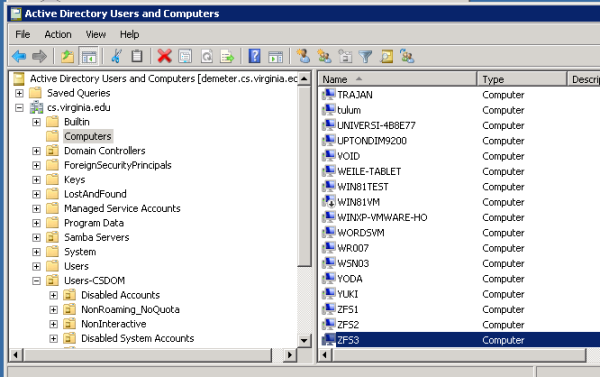

You should see a listing of all Samba shares. If you see no output then something is wrong, most likely related to Active Directory. Fixing this issue involves un-joining and re-joining the domain. Start by deleting the computer object in AD: log on to one of the AD servers, search for the ZFS server under Active Directory Users and Computers→Computers, right-click the computer object (e.g. ZFS2) and select "Delete".

Before joining the domain, we must make sure that one of the Active Directory servers is the first nameserver entry in /etc/resolv.conf:

root@zfs2:~# cat /etc/resolv.conf
search cs.virginia.edu
nameserver 128.143.67.108    <- Demeter's IP address
nameserver 128.143.136.15
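The nameserver ordering can be checked from a script before attempting the join. This is a minimal sketch: the function names first_nameserver and check_first_nameserver are hypothetical, and the IP address in the usage line is the one shown for Demeter above.

```shell
# first_nameserver FILE  (hypothetical helper)
# Prints the address of the first "nameserver" entry in FILE.
first_nameserver() {
    awk '$1 == "nameserver" { print $2; exit }' "$1"
}

# check_first_nameserver FILE EXPECTED_IP  (hypothetical helper)
# Succeeds only if the first nameserver in FILE is EXPECTED_IP.
check_first_nameserver() {
    [ "$(first_nameserver "$1")" = "$2" ]
}

# Usage:
#   check_first_nameserver /etc/resolv.conf 128.143.67.108 \
#       || echo "AD server is not the first nameserver" >&2
```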

Then run the following and follow the prompts to re-join the domain:

root@zfs2:~# smbadm join -u administrator cs.virginia.edu
After joining cs.virginia.edu the smb service will be restarted automatically.
Would you like to continue? [no]: yes
Enter domain password:
Locating DC in cs.virginia.edu ... this may take a minute ...
Joining cs.virginia.edu ... this may take a minute ...
Successfully joined cs.virginia.edu

Snapshots

See the main article on Backups for more information.

Snapshots are automatically taken every 12 hours at 6am and 6pm. We have no mechanism in place to monitor utilization of our partitions, so partitions will occasionally fill up because of snapshots. Follow these steps to free up space by deleting old snapshots.

First, get a list of snapshots on the partition on which you want to free up space:

root@zfs3:~# zfs list -r -t snap zf15-18.tank/zf15
NAME                           USED   AVAIL  REFER  MOUNTPOINT
zf15-18.tank/zf15@12.06.17-00  544M   -      3.94T  -
zf15-18.tank/zf15@12.06.17-06  538M   -      3.94T  -
zf15-18.tank/zf15@12.06.17-12  5.28G  -      3.95T  -
zf15-18.tank/zf15@12.06.17-18  89.3M  -      3.95T  -
zf15-18.tank/zf15@12.07.17-00  67.4M  -      3.95T  -
zf15-18.tank/zf15@12.07.17-06  56.7M  -      3.95T  -
zf15-18.tank/zf15@12.07.17-12  116M   -      3.96T  -
zf15-18.tank/zf15@12.07.17-18  87.2M  -      3.96T  -
...
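To judge roughly how much space a set of snapshots holds, the USED column of the listing above can be totalled. This is a sketch under assumptions: the function name sum_snapshot_used is hypothetical, and it assumes the human-readable K/M/G/T suffixes shown in the listing. The USED value of a snapshot counts only the space unique to that snapshot, so the total is a lower bound on what deleting them all would free.

```shell
# sum_snapshot_used  (hypothetical helper)
# Reads `zfs list -t snap` output on stdin and prints the total of the
# USED column in GiB. Lines whose first field has no "@" (such as the
# header) are skipped.
sum_snapshot_used() {
    awk '
        index($1, "@") {
            n = $2 + 0                   # numeric part, e.g. 5.28 from "5.28G"
            u = substr($2, length($2))   # last character is the unit suffix
            if      (u == "K") n /= 1024 * 1024
            else if (u == "M") n /= 1024
            else if (u == "T") n *= 1024
            else if (u != "G") n /= 1024 * 1024 * 1024   # treat as plain bytes
            total += n
        }
        END { printf "%.2f\n", total }
    '
}

# Usage:
#   zfs list -r -t snap zf15-18.tank/zf15 | head -6 | sum_snapshot_used
```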

Say we want to delete the oldest 5 snapshots. We can pipe the output of this command into head -6 to take the top 6 lines, and then pipe that into tail -5 to drop the header line that we don't want:

root@zfs3:~# zfs list -r -t snap zf15-18.tank/zf15 | head -6 | tail -5
zf15-18.tank/zf15@12.06.17-00  544M   -  3.94T  -
zf15-18.tank/zf15@12.06.17-06  538M   -  3.94T  -
zf15-18.tank/zf15@12.06.17-12  5.28G  -  3.95T  -
zf15-18.tank/zf15@12.06.17-18  89.3M  -  3.95T  -
zf15-18.tank/zf15@12.07.17-00  67.4M  -  3.95T  -

We then use awk to get only the first column (the snapshot names). Redirect this into a temporary file, check its contents, and then run the following loop to delete the snapshots.

root@zfs3:~# zfs list -r -t snap zf15-18.tank/zf15 | head -6 | tail -5 | awk '{print $1}' > /tmp/zf15.snaps

root@zfs3:~# for i in $(cat /tmp/zf15.snaps); do zfs destroy "$i"; done
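Since zfs destroy is not reversible, one option is to print the destroy commands for review before running any of them. The sketch below is an alternative to the temporary-file loop, not the procedure described above: the function name destroy_commands is hypothetical, and the usage line relies on zfs list -H -o name -s creation to produce bare snapshot names sorted oldest first.

```shell
# destroy_commands  (hypothetical helper)
# Reads snapshot names on stdin and prints one `zfs destroy` command per
# name. Names without "@" are rejected, so a dataset (rather than a
# snapshot) is never turned into a destroy command by mistake.
destroy_commands() {
    while IFS= read -r snap; do
        case "$snap" in
            *@*) printf 'zfs destroy %s\n' "$snap" ;;
            *)   printf 'skipping non-snapshot: %s\n' "$snap" >&2 ;;
        esac
    done
}

# Usage: print the commands for the 5 oldest snapshots, review them,
# then re-run with "| sh" appended to execute them.
#   zfs list -H -o name -t snapshot -s creation -r zf15-18.tank/zf15 | head -5 | destroy_commands
```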

CoreZFS servers

zpool persistence

After installing the ZFS on Linux packages on CentOS, we have several ZFS-related systemd services:

[root@corezfs01 ~]# systemctl list-unit-files | grep zfs
zfs-import-cache.service  enabled
zfs-import-scan.service   disabled
zfs-mount.service         enabled
zfs-share.service         enabled
zfs-zed.service           enabled
zfs-import.target         enabled
zfs.target                enabled

In the default state of these ZFS services after installation, any zpools created on the system will disappear once the system reboots. There is a fix for this behavior, but I have not found the best way to perform this action using Puppet. In the meantime, we need to run the following command on new ZFS servers:

[root@corezfs01 ~]# systemctl preset zfs-import-cache zfs-import-scan zfs-mount zfs-share zfs-zed zfs.target
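After running the preset command, it may be worth confirming which ZFS units ended up enabled before the next reboot. The sketch below filters systemctl list-unit-files output; the function name zfs_units_not_enabled is hypothetical. Whether a given unit should be enabled is a site decision (in the listing above, zfs-import-scan.service is disabled while zfs-import-cache.service is enabled).

```shell
# zfs_units_not_enabled  (hypothetical helper)
# Reads `systemctl list-unit-files` output on stdin and prints every
# unit whose name starts with "zfs" and whose state is not "enabled".
zfs_units_not_enabled() {
    awk '$1 ~ /^zfs/ && $2 != "enabled" { print $1, $2 }'
}

# Usage:
#   systemctl list-unit-files | zfs_units_not_enabled
```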

ZFS quotas

Each ZFS dataset has a disk space quota set. To see the quotas, log in to the ZFS server and use the zfs command:

[root@corezfs01 ~]# zfs get refquota,quota u/av6ds
NAME     PROPERTY  VALUE  SOURCE
u/av6ds  refquota  19G    local
u/av6ds  quota     20G    local

The refquota limits the space the dataset itself references, not counting snapshots or descendant datasets. A user who reaches it can no longer write new data, but can still remove files to get back under the limit. The quota is a hard limit that also counts snapshots and descendants; once it is reached, the user can be locked out from acting on files at all. We only set the refquota. Do not set the hard quota! To set a quota, use the zfs set command:

[root@corezfs01 ~]# zfs set refquota=29g u/av6ds
[root@corezfs01 ~]# zfs get refquota u/av6ds
NAME     PROPERTY  VALUE  SOURCE
u/av6ds  refquota  29G    local
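To spot users who are approaching their refquota before they hit it, the referenced and refquota values can be compared in bulk. This is a sketch under assumptions: the function name near_refquota and the 90 percent threshold are hypothetical, the parent dataset u is inferred from the u/av6ds example above, and the usage line assumes that zfs list on the Linux servers supports -p for exact byte values.

```shell
# near_refquota PERCENT  (hypothetical helper)
# Reads "name referenced refquota" lines (byte values) on stdin and
# prints the datasets whose referenced space is at or above PERCENT of
# their refquota. Datasets with no refquota (0, "none" or "-") are skipped.
near_refquota() {
    awk -v pct="$1" '
        $3 + 0 > 0 {
            used = 100 * $2 / $3
            if (used >= pct + 0) printf "%s %.0f%%\n", $1, used
        }
    '
}

# Usage (90 is an arbitrary example threshold):
#   zfs list -Hp -o name,referenced,refquota -r u | near_refquota 90
```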