- We want to allow data entry of organizations

- Add descriptions to the organization table

- Need hierarchical organizations

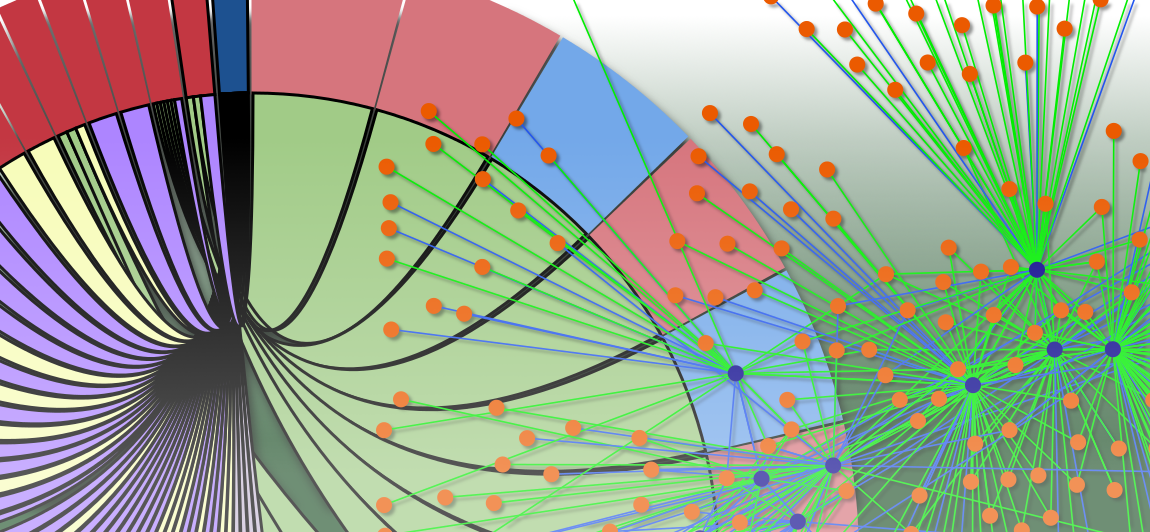

- Interesting visualizations (Doug’ll be doing this)

- Distributions: age of wives at time of marriage (for one man, for a group)

- Average number of wives for polygamous marriages

- How many of the adopted single males were brothers of one of the wives?

- How many in a family unit came from the same region?

- What offices did the husbands in these plural root marriages hold?

- Use the same dates I used for the graphs (these might be interesting research questions for me)

- As the man ages, what do the distributions look like? How does the distribution for each smaller period compare with the other two?

- So, distributions of wives’ ages for all time at each of these points in time

- Distributions of wives’ ages for only the wives added in that smaller time interval (comparison between marriages that happen in the various intervals)

- How long have the first two people been married before the third person is brought into the marriage?
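A minimal Python sketch of how several of these questions could be computed, assuming a hypothetical flat table of (husband, wife, wife's birth date, marriage date) records. The record layout, function names, and sample rows are invented for illustration; the real marriage database will differ.

```python
from collections import Counter
from datetime import date
from statistics import mean

# Hypothetical flat records: (husband_id, wife_id, wife_birth_date, marriage_date).
# Field layout and sample rows are invented for illustration only.
MARRIAGES = [
    ("h1", "w1", date(1820, 5, 1), date(1840, 6, 1)),
    ("h1", "w2", date(1825, 3, 9), date(1846, 1, 20)),
    ("h1", "w3", date(1830, 7, 4), date(1852, 2, 2)),
    ("h2", "w4", date(1828, 1, 1), date(1849, 9, 9)),
]


def age_at(birth, when):
    """Age in whole years on date `when`."""
    return when.year - birth.year - ((when.month, when.day) < (birth.month, birth.day))


def age_distribution(records, start=None, end=None, husband=None):
    """Counter {age: count} of wives' ages at marriage, optionally restricted to
    marriages in [start, end) and/or to one husband."""
    return Counter(
        age_at(birth, married)
        for h, _, birth, married in records
        if (start is None or married >= start)
        and (end is None or married < end)
        and (husband is None or h == husband)
    )


def mean_wives_polygamous(records):
    """Average number of wives among husbands with more than one wife."""
    counts = Counter(h for h, *_ in records)
    plural = [c for c in counts.values() if c > 1]
    return mean(plural) if plural else 0.0


def days_before_third_person(records, husband):
    """Days between the first marriage (husband + first wife) and the second wife
    joining, i.e. the third person entering the marriage; None if there is none."""
    dates = sorted(m for h, _, _, m in records if h == husband)
    return (dates[1] - dates[0]).days if len(dates) >= 2 else None
```

Passing only `end` gives the distribution of every marriage up to that point in time; passing both `start` and `end` restricts it to the wives added in that interval, which is the comparison between intervals described above. The sketch reads "the first two people" as the husband and first wife, so the third person is the second wife.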

- Research Question

- An identity function over time, as distinct from an identity function that gives a different view of the graph (a lens function)

- Identity function for a specific metric over a graph. For example, for connectedness, an identity function that says when a marriage gets to a point where it has over 7 participating people, it is treated in a different manner (its identity changes, it’s discounted in the metric or treated differently in the computation of the measure at that point in time).
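One way to read the lens idea, sketched in Python under loud assumptions: the marriages at a single time step are sets of participants, "connectedness" is taken to mean the fraction of person pairs linked through kept marriages, and the hypothetical `size_lens` simply drops any marriage with more than 7 participants. Discounting with a fractional weight would be an equally valid reading; this is not a settled definition.

```python
from collections import Counter
from typing import Callable, FrozenSet, List

Marriage = FrozenSet[str]          # the people participating in one marriage at time t
Lens = Callable[[Marriage], bool]  # True = the marriage counts toward the metric


def identity_lens(m: Marriage) -> bool:
    """Baseline: every marriage keeps its identity."""
    return True


def size_lens(threshold: int = 7) -> Lens:
    """Hypothetical lens: a marriage with more than `threshold` participants
    changes identity and is dropped from the metric."""
    return lambda m: len(m) <= threshold


def connectedness(marriages: List[Marriage], lens: Lens) -> float:
    """Fraction of person pairs joined through marriages the lens keeps."""
    parent = {p: p for m in marriages for p in m}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for m in marriages:
        if lens(m):
            first, *rest = sorted(m)
            for p in rest:
                parent[find(p)] = find(first)
    n = len(parent)
    if n < 2:
        return 0.0
    sizes = Counter(find(p) for p in parent)
    linked = sum(s * (s - 1) // 2 for s in sizes.values())
    return linked / (n * (n - 1) // 2)
```

With a 3-person marriage and an 8-person marriage, the identity lens links 31 of the 55 possible pairs, while `size_lens(7)` discounts the larger marriage and links only 3 of 55.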

Important

- Should we be sending an abstract to DHCS2015?

Notes

- Thesis

- Enunciate a question that your workplan will address.

- Will the measures we define have efficient…?

- What I do will provide me this type of answer (to a larger question)

- Need questions that have multiple possible answers

- What are the most important contributions of the dissertation? They will come out of answering the thesis question(s).

- Don’t mention datasets and types in the thesis statement

- 2-3 sentences tops (maybe 5-6 lines on a page)

- Make the thesis as crystalline as possible:

- Succinct (and dense)

- Has a good ring to it (to me)

- Address dynamics in the proposal. It’s not crucial in the thesis statement, but it should be in the proposal.

Notes from Jason

- Humanities perspective and a technical perspective

- Wants to bring in the linked database (connecting multiple databases together)

- Streamline the Marriage stuff.

- Lay out the humanities research question and the technical research questions. What exactly do you need the grant to do?

- If we find a productive way of combining the databases to answer the questions we want to answer, then we’d be in a position to show how the smaller (joint-DB) part fits into the bigger research questions of the marriage project.

- Practice of polygamy and its origins, and scholarly history

- One component of that project is this piece…

- Need some sense of what this metadata looks like; there are ways people have gotten around crosswalking data

- Want the reviewers to be captivated by:

- Humanities interesting history stuff

- The very pragmatic nature of the need (e.g., the datasets differ, it’s hard to coordinate them, and this will help)

- Should include both the humanities and technical aspects of the previous works

- Talk about what we’ll build: an interface, and a schema to join the disparate datasets

- This is allowed and encouraged to be experimental (ex: We think this will be better, and here’s how we’re going to design and test)

- Stage out the workplan so that they can see how you’re putting it together and going to move forward (piece by piece)

- They streamlined and expanded what’s allowed. They removed much of the emphasis on innovation but added a framework, and they require a way to disseminate the knowledge learned (any kind of output is allowed, in whatever form you feel best disseminates that knowledge: scripts, samples, data, schema, etc.).

- There’s no expectation (at least not for 40-70k) that you’ll have a formal, polished tool or anything like it. Have a realistic set of expectations about what the outputs are.

- “Here’s how we better understand the relationships between these partners…”

- “This is how one dataset represents a relationship, and here’s how another one does it.” Then go on to understand better how they differ and how they link, so we can better understand how those relationships are detailed.

- People are interested in interesting arguments and details

- Just need to get into enough detail that people are captivated by the details without tripping over them or being overwhelmed.

- This kind of data curation component is the least sexy thing of this kind of scholarship, but it’s the most important. This is a common problem that’s rarely addressed but commonly raised.

- Need environmental scan (what’s been currently done and what’s out there)

What Luther Suggests

- Prototype (initial)

- Match table

- (DB, table, row) matched to (DB, table, row), with metadata: who said they were the same, when, and notes about the match

- Need to know how to identify a thing in a generalizable form (DB entry, XML entry, XLS entry, etc.)

- Lots of idiosyncratic things
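A minimal sketch of the match-table record, assuming Python dataclasses. `RecordRef`, `Match`, and `MatchTable` are hypothetical names, and how XML or XLS entries map onto (DB, table, row) is left open, as in the notes.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass(frozen=True)
class RecordRef:
    """Generic pointer to one entity in one source. For non-relational sources
    (XML, XLS, ...) `table`/`row` need a source-specific encoding, e.g. a sheet
    name and row number (an assumption, not a settled design)."""
    db: str
    table: str
    row: str


@dataclass(frozen=True)
class Match:
    left: RecordRef
    right: RecordRef
    asserted_by: str       # who said they were the same (person, script, log import)
    asserted_at: datetime  # when the assertion was made
    notes: str = ""        # free-text notes about the match


class MatchTable:
    def __init__(self) -> None:
        self.matches: List[Match] = []

    def add(self, left: RecordRef, right: RecordRef, who: str,
            notes: str = "", when: Optional[datetime] = None) -> Match:
        """Record one assertion; defaults the timestamp to now."""
        m = Match(left, right, who, when or datetime.now(), notes)
        self.matches.append(m)
        return m

    def matches_for(self, ref: RecordRef) -> List[RecordRef]:
        """Records directly asserted to be the same as `ref`, in either direction."""
        return [m.right if m.left == ref else m.left
                for m in self.matches if ref in (m.left, m.right)]
```

Keeping every assertion as its own row, rather than merging records, preserves who said what and when, which the consistency checks later in these notes would need.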

- Need somewhere to store and host the database (backend)

- API

- Into the new database

- Into all the other databases that are linked from this one

- User Interface (to the back end)

- Edit and query interfaces

- Good query language (cross-database) for humanities researchers

- Where to go from here:

- Populate

- Programmatic, human, from logs

- We have the BYU to UVA connector

- Cross-database stats and visualizations

- Consistency checks

- Do all the sources and matches actually agree?

- Do all the assertions check out?
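One possible consistency check, sketched under the assumption that each match is a pair of (DB, table, row) tuples and each record exposes a few comparable fields: group records transitively with union-find, then flag fields whose values disagree within a group. A flagged group means either a wrong match or genuinely conflicting sources; the sketch can't tell which.

```python
from collections import defaultdict
from typing import Dict, Hashable, Iterable, List, Set, Tuple

Ref = Tuple[str, str, str]  # (db, table, row)


def clusters(matches: Iterable[Tuple[Ref, Ref]]) -> List[Set[Ref]]:
    """Group records linked directly or transitively by matches (union-find)."""
    parent: Dict[Ref, Ref] = {}

    def find(x: Ref) -> Ref:
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for a, b in matches:
        parent[find(a)] = find(b)
    groups: Dict[Ref, Set[Ref]] = defaultdict(set)
    for x in list(parent):
        groups[find(x)].add(x)
    return list(groups.values())


def conflicts(matches: Iterable[Tuple[Ref, Ref]],
              values: Dict[Ref, Dict[str, Hashable]]) -> List[Tuple[List[Ref], Dict[str, Set[Hashable]]]]:
    """For each group of linked records, report fields whose values disagree."""
    report = []
    for group in clusters(matches):
        seen: Dict[str, Set[Hashable]] = defaultdict(set)
        for ref in group:
            for field, value in values.get(ref, {}).items():
                seen[field].add(value)
        disagreements = {f: vs for f, vs in seen.items() if len(vs) > 1}
        if disagreements:
            report.append((sorted(group), disagreements))
    return report
```

Transitivity is the interesting part: two matches that each look fine (A = B, B = C) can still produce a conflict between A and C.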

To Do

- Look for notes from the Twitter/Gnip presentation. I don’t think I have any, though.

- Look up the gamma probability distribution function (used in the Gnip presentation)
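For reference, the gamma density in shape/scale form is f(x; k, θ) = x^(k−1) e^(−x/θ) / (Γ(k) θ^k) for x ≥ 0. Whether the Gnip presentation used this form or the shape/rate form (rate β = 1/θ) isn't recorded here. A small standard-library Python version:

```python
import math


def gamma_pdf(x: float, k: float, theta: float) -> float:
    """Gamma density with shape k > 0 and scale theta > 0:
    f(x; k, theta) = x**(k - 1) * exp(-x / theta) / (Gamma(k) * theta**k) for x >= 0."""
    if k <= 0 or theta <= 0:
        raise ValueError("shape k and scale theta must be positive")
    if x < 0:
        return 0.0
    if x == 0:
        # Density at 0: infinite for k < 1, 1/theta for k == 1, 0 for k > 1.
        return math.inf if k < 1 else (1.0 / theta if k == 1 else 0.0)
    return x ** (k - 1) * math.exp(-x / theta) / (math.gamma(k) * theta ** k)
```

With k = 1 this reduces to the exponential distribution. If SciPy is available, `scipy.stats.gamma(a=k, scale=theta).pdf(x)` computes the same density.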

- What happens with closeness/harmonic centrality when there are multiple disjoint cycles? What if the cycles are of the same size?
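A quick way to explore the question: compute both measures on a graph made of disjoint cycles. This Python sketch uses plain BFS. Returning 0 for classic closeness when some node is unreachable is one convention among several (an assumption here).

```python
from collections import deque
from typing import Dict, List

Graph = Dict[str, List[str]]


def cycle(nodes: List[str]) -> Graph:
    """Adjacency lists for a simple cycle through `nodes` (needs len(nodes) >= 3)."""
    n = len(nodes)
    return {v: [nodes[(i - 1) % n], nodes[(i + 1) % n]] for i, v in enumerate(nodes)}


def bfs_distances(adj: Graph, src: str) -> Dict[str, int]:
    """Hop distances from `src` to every node reachable from it."""
    dist = {src: 0}
    queue = deque([src])
    while queue:
        u = queue.popleft()
        for w in adj[u]:
            if w not in dist:
                dist[w] = dist[u] + 1
                queue.append(w)
    return dist


def harmonic(adj: Graph, v: str) -> float:
    """Sum of 1/d(v, u) over u != v; unreachable nodes contribute 0."""
    return sum(1 / d for u, d in bfs_distances(adj, v).items() if u != v)


def closeness(adj: Graph, v: str) -> float:
    """Classic closeness (n - 1) / sum of distances; returns 0.0 when some node
    is unreachable (one convention; others rescale by component size)."""
    dist = bfs_distances(adj, v)
    if len(dist) < len(adj):
        return 0.0
    return (len(adj) - 1) / sum(dist.values())


# Two disjoint cycles of different sizes, as a test case.
two_cycles = {**cycle(["a1", "a2", "a3", "a4"]), **cycle(["b1", "b2", "b3", "b4", "b5", "b6"])}
```

Every node on a 4-cycle scores 1 + 1 + 1/2 = 2.5 in harmonic centrality whether or not other cycles exist, so two equal-size disjoint cycles are indistinguishable; a node on the 6-cycle scores 1 + 1 + 1/2 + 1/2 + 1/3 ≈ 3.33. Classic closeness collapses to the fallback value for every node.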

- Look up the proposal format the department requires (grad handbook). Length is 15 pages?

- We want to be characterizing the shapes of these graphs/distributions, too. Not just explaining what happens at one point in time, but what does the change look like over time?

- Aside: for betweenness centrality, if at time it’s 0, we can tell whether it’s disjoint, a cycle, or a clique by counting the edges up to , since if there are none it’s disjoint, if there are it’s a cycle, and if there are more than it’s a clique.

- Possible measure that could be interesting to someone: how many nodes have paths of length in time?

- A possible extension moving forward: use the full and compute the measure over the entire evolving network to get a ground truth value, then

- can we use our measures to predict what would happen? So, take a shorter subset of and compute the measure. Can we predict what will happen after that subset? Does it match what we measured for all of ?

- This could be applicable to streaming graphs

- Develop and write the thesis statement

Notes

- Thesis statement notes

- Be careful with the wording, to make sure you’re not promising too much or too little, and to make sure it is research

- It could be “There are 4 different measures that can be made over evolving networks,” however, there is no research in this statement

- There could be a notion that they mean something, but that’s tricky to state

- Could have clauses dealing with the efficiency of calculation (usually a relative measure, such as more efficient than brute force), but these should be left for the body of the text and kept out of the thesis, since success there is hard to guarantee.

- Open question that could come up in the presentation and needs to be addressed:

- Are you going to improve it? (the current efficiency / calculation)

- Are you going to prove a lower bound?

- Is your improvement significant in terms of research?

- We should not say anywhere: we fail in the dissertation if we can’t prove a lower bound. That is bad.

- We should specifically state what we’re going to do. Ex: We’re going to do this and that, and if we end up with a proof of lower bound, then that’s awesome and great, but extra

- It needs to be more than: definition, coding and implementation

- What’s the research question there?

- It needs to be something that can fail. If it can’t fail, then it’s nothing more than development. It’s NOT research

- The thesis statement needs to be worded in a way that’s meaningful to me

- When I later need to say, “should I be looking at this, or that?” the thesis statement should be my guide:

- do what’s most relevant to the thesis statement

- If not, it might be that I need to update the thesis statement (which changes the direction of the project). In that case, I should take it to the committee and see what they say and whether any red flags are obvious to them.

- Include: Extensions to evolving networks

- They allow us to capture things in our motivating applications that current evolving-network models can’t capture

- Node-identity definitions and repercussions

- The extensions we defined are important to allow access to ___ that are not accessible from current work

- Include?: Measures about the graphs that will have relation to semantics of our motivating examples. This is tricky

- How to know when we succeed?

- Collaborator says it’s properly captured. This is NOT a good research plan, so we need a variation of this

- Compare to already agreed upon ways of capturing these changes and semantics. That is, “we capture at least as well as this other method” and we’re faster, quicker, more efficient, or better in some way

- We might not have these comparisons to current state of the art / agreed upon ways since we’re doing new things, like the Mormon marriages

- Might need to build up a straw-man argument in the text to show that we can’t do this.

- We don’t have the ability to do this, so say we’re not going to do that (“Usually people will compare this to other agreed upon way, but there aren’t agreed upon ways to do that, so we’re going to approximate it by…?”)

- Thesis statement (possibility):

- Evolving networks

- extensions

- metrics over those extensions

- motivating examples

- Note, from Worthy: This is still my dissertation. Don’t try to shape it around (or try to figure out) what Worthy’s saying. Make it your own! What’s of interest to you?

- Comments to look for from committee:

- This is only implementation, and it’s not research

- You think that’s interesting, but we don’t. It’s not interesting in CS.

- You don’t have a way to establish it, and they don’t think that counts (as a dissertation in CS). Specifically, I wrote: “You don’t have a way to establish it that they don’t think counts”

- One way to get around (or temper) a professor who wants a rigorous mathematical proof (of lower bound or something) is to have empirical evidence:

- Create an empirical framework (multiple families of graphs) and carry out the measurements over these families of graphs and compare them

- Need to worry about the synthetic data having the results we’re looking for baked in. So, we’ll need to craft synthetic graphs carefully.

- We’d want to make sure that the families of graphs are not skewed to our metrics/measures, but are a good representative sample (like the k-p graphs of the axiom paper?)

- This might be hard with real-world data, and simpler with synthetic data.

- Reasons that justify a choice of synthetic data design:

- It’s current best practice (or state of the art)

- or, theoretically, it’s good to do this because ___.
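A minimal sketch of such a framework, assuming Erdős–Rényi G(n, p) graphs as one illustrative family (a stand-in chosen for simplicity, not a claim about which families the real study should use) and average clustering as the measure under test. Fixed seeds keep runs reproducible.

```python
import random
from itertools import combinations
from statistics import mean


def gnp(n, p, rng):
    """Erdos-Renyi G(n, p): each of the n*(n-1)/2 possible edges appears with probability p."""
    adj = {v: set() for v in range(n)}
    for u, v in combinations(range(n), 2):
        if rng.random() < p:
            adj[u].add(v)
            adj[v].add(u)
    return adj


def avg_clustering(adj):
    """Mean local clustering coefficient; nodes with degree < 2 count as 0."""
    if not adj:
        return 0.0
    total = 0.0
    for v, nbrs in adj.items():
        k = len(nbrs)
        if k >= 2:
            links = sum(1 for a, b in combinations(nbrs, 2) if b in adj[a])
            total += 2 * links / (k * (k - 1))
    return total / len(adj)


def run_family(measure, n, ps, samples=20, seed=0):
    """Apply `measure` to `samples` random graphs per parameter p; return {p: mean value}."""
    rng = random.Random(seed)
    return {p: mean(measure(gnp(n, p, rng)) for _ in range(samples)) for p in ps}
```

For G(n, p) the expected clustering is about p, which gives a known baseline to check the pipeline against before moving on to families whose behavior isn't known in advance, and sweeping p is one way to avoid testing only the parameter settings that favor a given measure.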

Notes

- Were there semantics to Tang et al.’s use of h in their definition? (i.e., that up to h edges could be traversed in each snapshot of the graph)

- Why did they do it? Why did they choose h?

- What does it mean semantically (beyond computationally)? Did they assume that a snapshot was “long enough” to traverse h edges during that time? What is h defined to be in their examples?

- They chose h as the horizon to model the speed at which messages pass relative to the length of the time window. It allows multiple events to happen within one snapshot, which covers cases where the snapshots are too coarse-grained. For their example graph (Enron emails) they use a time interval of 1 day and horizon h = 1. That is likely unrealistic: it lets a message travel only one hop (one email in a forwarding chain) per day.
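A simplified reading of the horizon idea, sketched in Python (not Tang et al.’s exact formal definition): within each snapshot, information can spread at most `h` hops from every node already reached. The function returns, for each reachable node, the number of windows needed to reach it.

```python
from typing import Dict, List, Set

Snapshot = Dict[str, Set[str]]  # undirected adjacency for one time window


def temporal_reach(snapshots: List[Snapshot], source: str, h: int) -> Dict[str, int]:
    """Map each node reachable from `source` to the number of windows needed to
    reach it (source -> 0), allowing at most `h` hops inside any one window."""
    reached = {source: 0}
    for t, snap in enumerate(snapshots):
        layer: Set[str] = set(reached)  # everyone informed so far can forward
        for _ in range(h):
            # One more hop inside this window, from the newest frontier.
            layer = {w for u in layer for w in snap.get(u, ()) if w not in reached}
            if not layer:
                break
            for w in layer:
                reached[w] = t + 1
    return reached
```

On a path a-b-c-d present in two consecutive daily snapshots, h = 1 reaches only b and c (one hop per day), while h = 3 reaches every node on the first day, which is the coarse-snapshot effect the horizon is meant to model.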

- Why is clustering coefficient important over various s? If we take multiple different sized time-neighborhoods around and flatten the graph over that interval, what does that mean? What does that say about the graph around ? Is there anything we can get out of it for dynamics?

- If we look over the different distributions of the clustering coefficient, what are they trying to tell us?

- Also, which collapsing / flattening scheme are you using at that point? Does it matter?
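To make the flattening question concrete, a Python sketch that collapses timestamped edges in [t − w, t + w] into one static graph under two hypothetical schemes ("union": edge seen at any step; "all": edge seen at every step) and reports average clustering per half-width w. The names `t` and `w` stand in for symbols missing from these notes.

```python
from itertools import combinations
from typing import Dict, FrozenSet, List, Set, Tuple

TimedEdge = Tuple[str, str, int]  # (u, v, time step); assumes u != v


def flatten(edges: List[TimedEdge], t: int, w: int, scheme: str = "union") -> Dict[str, Set[str]]:
    """Collapse the edges with time in [t - w, t + w] into one static graph.
    scheme="union": keep an edge seen at any step in the window;
    scheme="all":   keep only edges seen at every step in the window."""
    seen: Dict[FrozenSet[str], Set[int]] = {}
    for u, v, s in edges:
        if t - w <= s <= t + w:
            seen.setdefault(frozenset((u, v)), set()).add(s)
    adj: Dict[str, Set[str]] = {}
    for pair, steps in seen.items():
        if scheme == "union" or len(steps) == 2 * w + 1:
            u, v = tuple(pair)
            adj.setdefault(u, set()).add(v)
            adj.setdefault(v, set()).add(u)
    return adj


def avg_clustering(adj: Dict[str, Set[str]]) -> float:
    """Mean local clustering coefficient; degree < 2 counts as 0, empty graph -> 0."""
    if not adj:
        return 0.0
    total = 0.0
    for v, nbrs in adj.items():
        k = len(nbrs)
        if k >= 2:
            links = sum(1 for a, b in combinations(nbrs, 2) if b in adj[a])
            total += 2 * links / (k * (k - 1))
    return total / len(adj)


def clustering_profile(edges, t, windows, scheme="union"):
    """Clustering coefficient of the flattened graph around t for each half-width w."""
    return {w: avg_clustering(flatten(edges, t, w, scheme)) for w in windows}
```

For a triangle whose three edges appear at steps 0, 1, and 2, the union scheme at t = 1 gives clustering 0 for w = 0 and 1 for w = 1, while the all-steps scheme gives 0 at w = 1, so the choice of scheme does change the answer.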

- Really, we’re focusing on the dynamics of the graph and on understanding/characterizing the graph

- If we take a distribution of a metric, we should classify it. Ex: clustering coefficient for sliding window over time. That would give us a distribution of CC for each point in time. What does that look like?

- Could be described by family: Gaussian, Laplacian, Viral, other…

- So, it could be something like: This TIVG falls into class of distribution with peak value with regard to clustering coefficient
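A crude classification heuristic, sketched in Python as an assumption rather than a method from these notes: compare a sample’s excess kurtosis with the theoretical values for the Gaussian (0) and Laplace (3) families and report the histogram peak. It doesn’t cover “viral” or other families; a real analysis would fit candidate distributions and compare goodness of fit.

```python
import random
from statistics import fmean
from typing import List, Tuple


def excess_kurtosis(xs: List[float]) -> float:
    """Fourth standardized moment minus 3 (0 for a Gaussian, 3 for a Laplace)."""
    m = fmean(xs)
    m2 = fmean((x - m) ** 2 for x in xs)
    if m2 == 0:
        raise ValueError("constant sample: kurtosis undefined")
    m4 = fmean((x - m) ** 4 for x in xs)
    return m4 / m2 ** 2 - 3.0


def peak(xs: List[float], bins: int = 20) -> float:
    """Centre of the most populated histogram bin (a crude mode estimate)."""
    lo, hi = min(xs), max(xs)
    width = (hi - lo) / bins or 1.0
    counts = [0] * bins
    for x in xs:
        counts[min(int((x - lo) / width), bins - 1)] += 1
    i = max(range(bins), key=counts.__getitem__)
    return lo + (i + 0.5) * width


REFERENCE = {"Gaussian": 0.0, "Laplacian": 3.0}  # theoretical excess kurtosis


def classify(xs: List[float]) -> Tuple[str, float]:
    """Nearest reference family by excess kurtosis, plus the estimated peak value."""
    k = excess_kurtosis(xs)
    family = min(REFERENCE, key=lambda name: abs(REFERENCE[name] - k))
    return family, peak(xs)


# Usage on synthetic samples (a difference of two exponentials is Laplace-distributed).
rng = random.Random(1)
gaussian_like = [rng.gauss(0.5, 0.1) for _ in range(50000)]
laplace_like = [rng.expovariate(10) - rng.expovariate(10) + 0.5 for _ in range(50000)]
print(classify(gaussian_like)[0], classify(laplace_like)[0])
```

In the notes’ terms, the returned pair is the “class of distribution” and the “peak value” for, say, the clustering coefficients collected over sliding windows.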

- We have this immediate similarity to other applications (like the imaging/vision Gaussian pyramid)
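The pyramid analogy can be made concrete for a metric’s time series: repeatedly smooth with a small Gaussian-like kernel and downsample by 2, giving the metric at progressively coarser time scales. This Python sketch uses a 5-tap binomial kernel and clamped edges; both are conventional choices, not anything fixed by these notes.

```python
from typing import List

KERNEL = [1 / 16, 4 / 16, 6 / 16, 4 / 16, 1 / 16]  # 5-tap binomial approximation of a Gaussian


def blur(xs: List[float]) -> List[float]:
    """Convolve with KERNEL, clamping indices at both ends of the series."""
    n = len(xs)
    out = []
    for i in range(n):
        acc = 0.0
        for k, weight in enumerate(KERNEL):
            j = min(max(i + k - 2, 0), n - 1)  # clamp at the ends
            acc += weight * xs[j]
        out.append(acc)
    return out


def gaussian_pyramid(xs: List[float], levels: int) -> List[List[float]]:
    """Level 0 is the original series; each later level is blurred, then every second sample kept."""
    pyramid = [list(xs)]
    for _ in range(levels):
        if len(pyramid[-1]) < 2:
            break
        pyramid.append(blur(pyramid[-1])[::2])
    return pyramid
```

Each level plays roughly the role of a wider time window, so comparing a measure across levels is one analogue of comparing it across window sizes.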

- We really need to look at the semantic connections! What does this measure mean, semantically?

- See hand-drawn notes for more