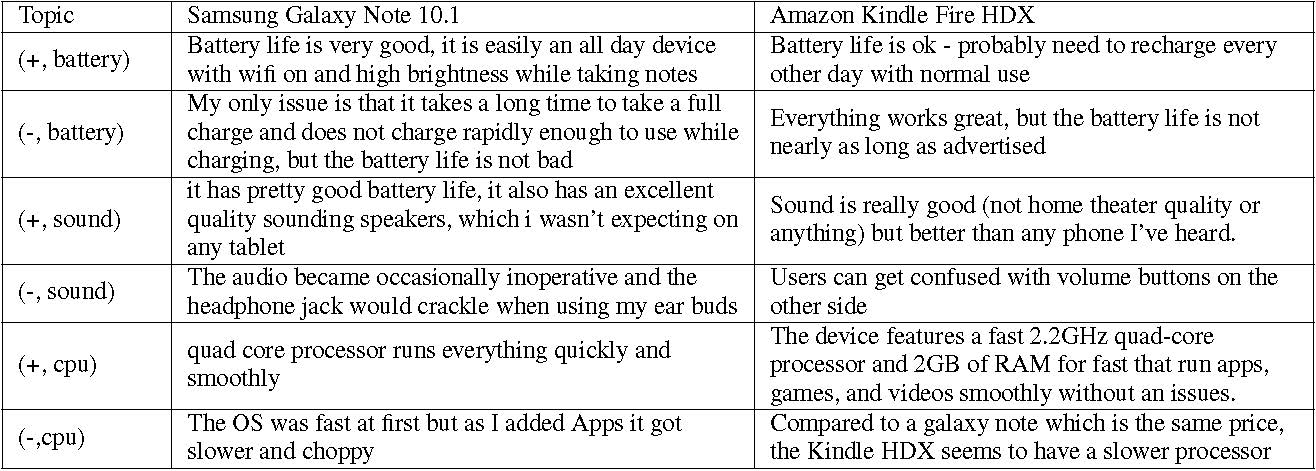

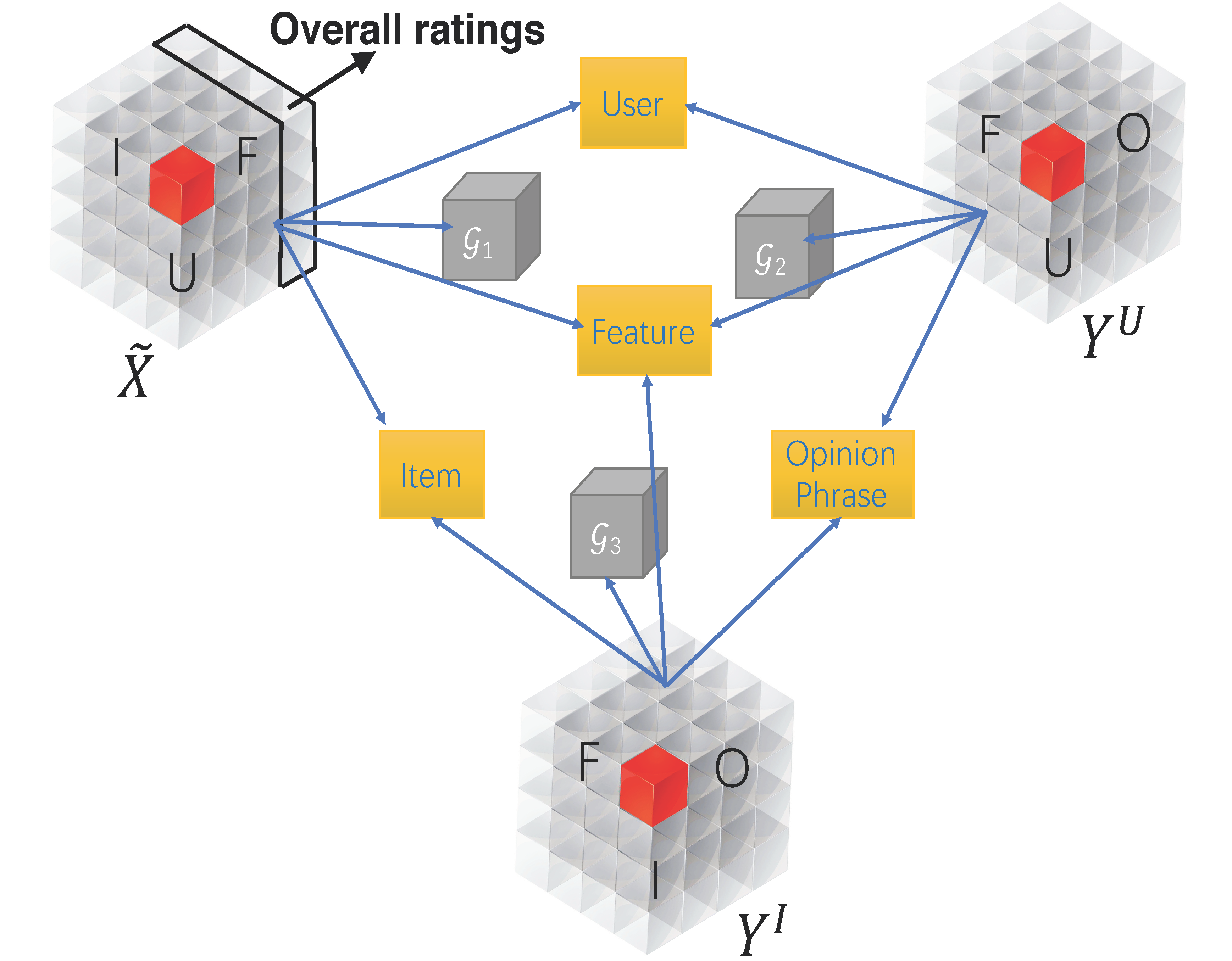

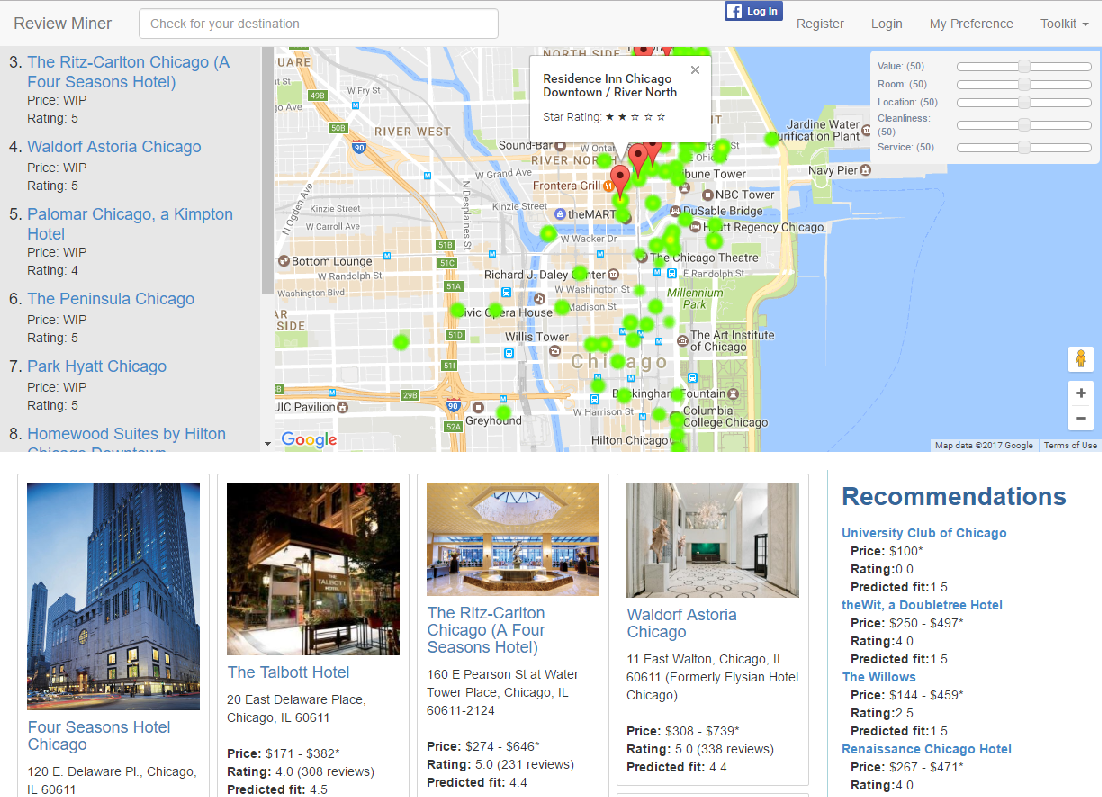

Summary: Explaining automatically generated recommendations allows users to make more informed and accurate decisions about which results to utilize, and therefore improves their satisfaction. In this work, we develop a multi-task learning solution for explainable recommendation. Two companion learning tasks of user preference modeling for recommendation and opinionated content modeling for explanation are integrated via a joint tensor factorization. As a result, the algorithm predicts not only a user's preference over a list of items, i.e., recommendation, but also how the user would appreciate a particular item at the feature level, i.e., opinionated textual explanation.
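To make the idea of a joint factorization concrete, here is a minimal NumPy sketch. It is an illustration under stated assumptions, not the published model: it assumes a Tucker-style decomposition in which one set of user, item, and feature factors is shared, and an extra hypothetical "overall rating" slot is appended to the feature factors so that the same factors score both recommendation and feature-level explanation. The factors are random here; the names `predict_scores`, `recommend`, and `explain` are made up for this example, and no training step is shown.

```python
import numpy as np

rng = np.random.default_rng(0)

n_users, n_items, n_features = 4, 5, 3
rank = 2  # latent dimension, chosen arbitrarily for illustration

# Shared latent factors (random stand-ins for learned values).
U = rng.random((n_users, rank))          # user factors
V = rng.random((n_items, rank))          # item factors
# Assumption: the last row of F is an extra slot for the overall rating,
# so recommendation and explanation read from the same factorization.
F = rng.random((n_features + 1, rank))   # feature factors + rating slot
G = rng.random((rank, rank, rank))       # core tensor of the Tucker form


def predict_scores(u, i):
    """Return (overall_score, per_feature_scores) for user u and item i."""
    # scores[f] = sum_{a,b,c} G[a,b,c] * U[u,a] * V[i,b] * F[f,c]
    scores = np.einsum("abc,a,b,fc->f", G, U[u], V[i], F)
    return scores[-1], scores[:-1]


def recommend(u, k=2):
    """Rank items for user u by the predicted overall score."""
    overall = np.einsum("abc,a,ib,c->i", G, U[u], V, F[-1])
    return np.argsort(-overall)[:k]


def explain(u, i, k=1):
    """Pick the k features user u is predicted to value most on item i."""
    _, feature_scores = predict_scores(u, i)
    return np.argsort(-feature_scores)[:k]


top_items = recommend(0, k=2)
top_features = explain(0, int(top_items[0]), k=1)
print("recommended items:", top_items, "explaining features:", top_features)
```

Because both tasks read from the same `U`, `V`, and `G`, fitting either task moves the parameters used by the other, which is the sense in which the two tasks are learned jointly in this sketch.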

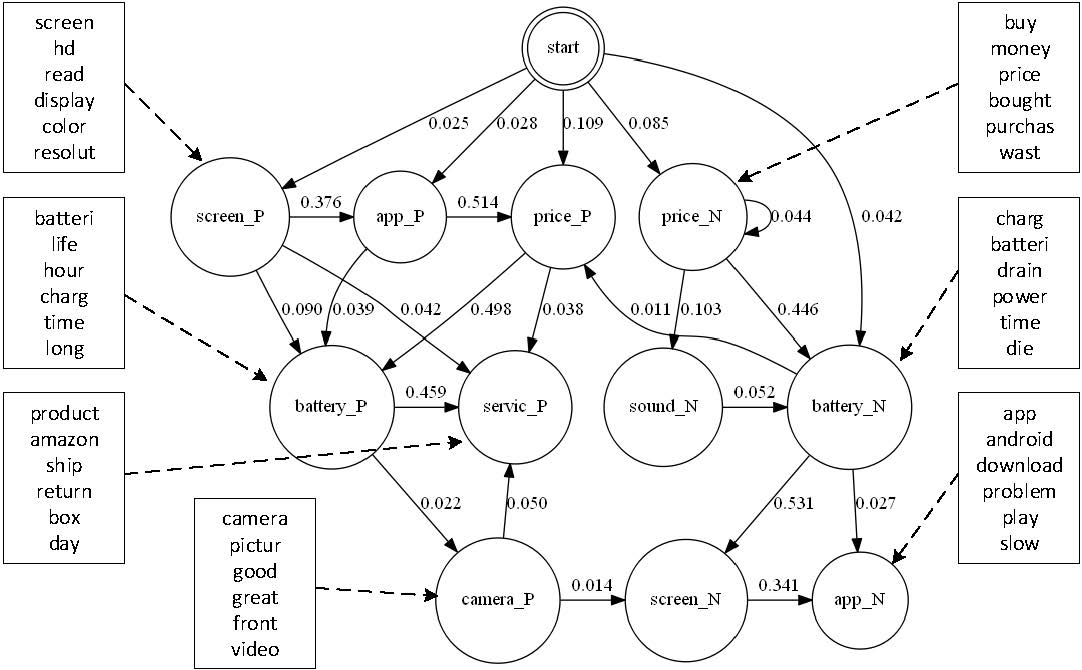

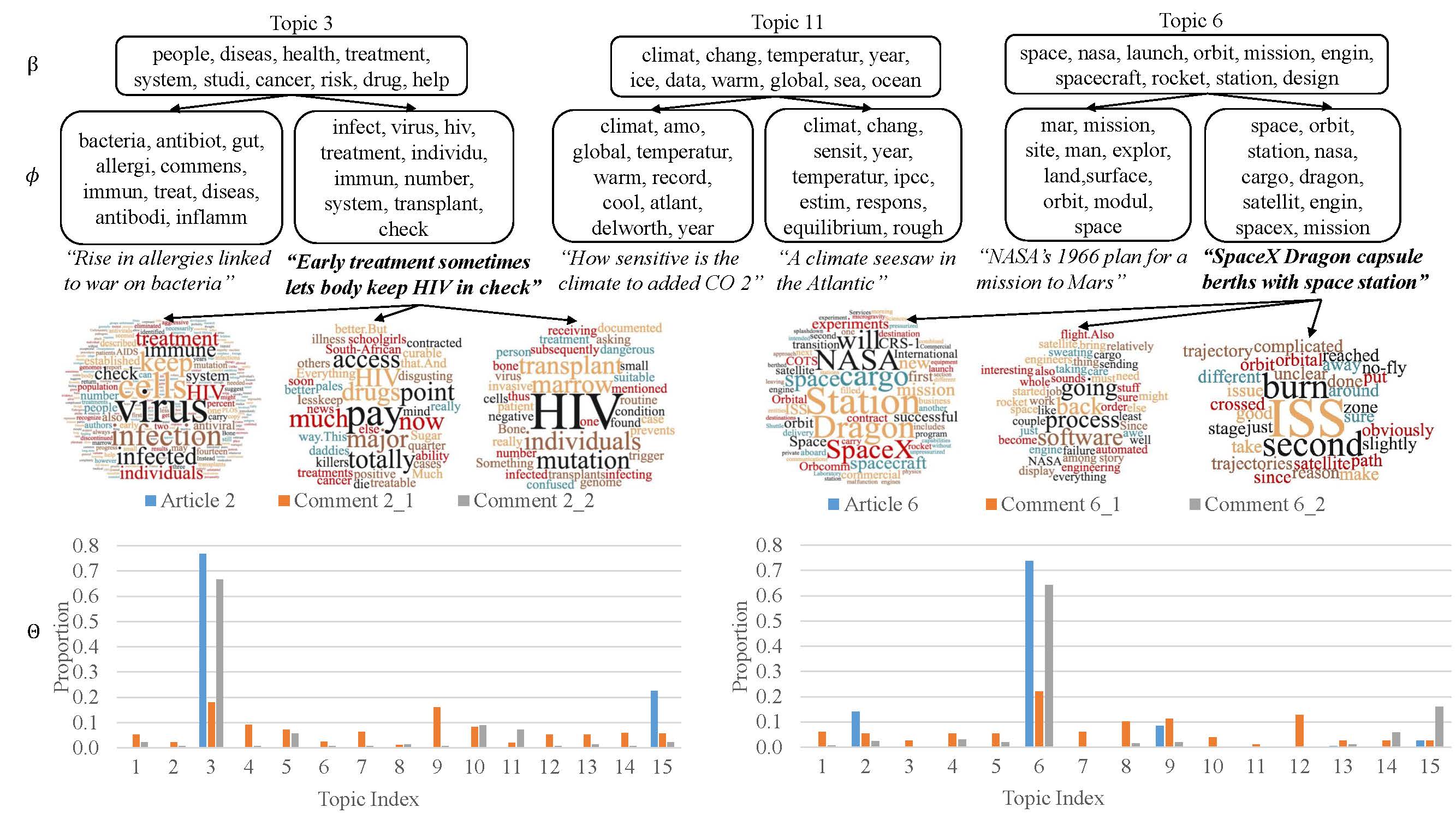

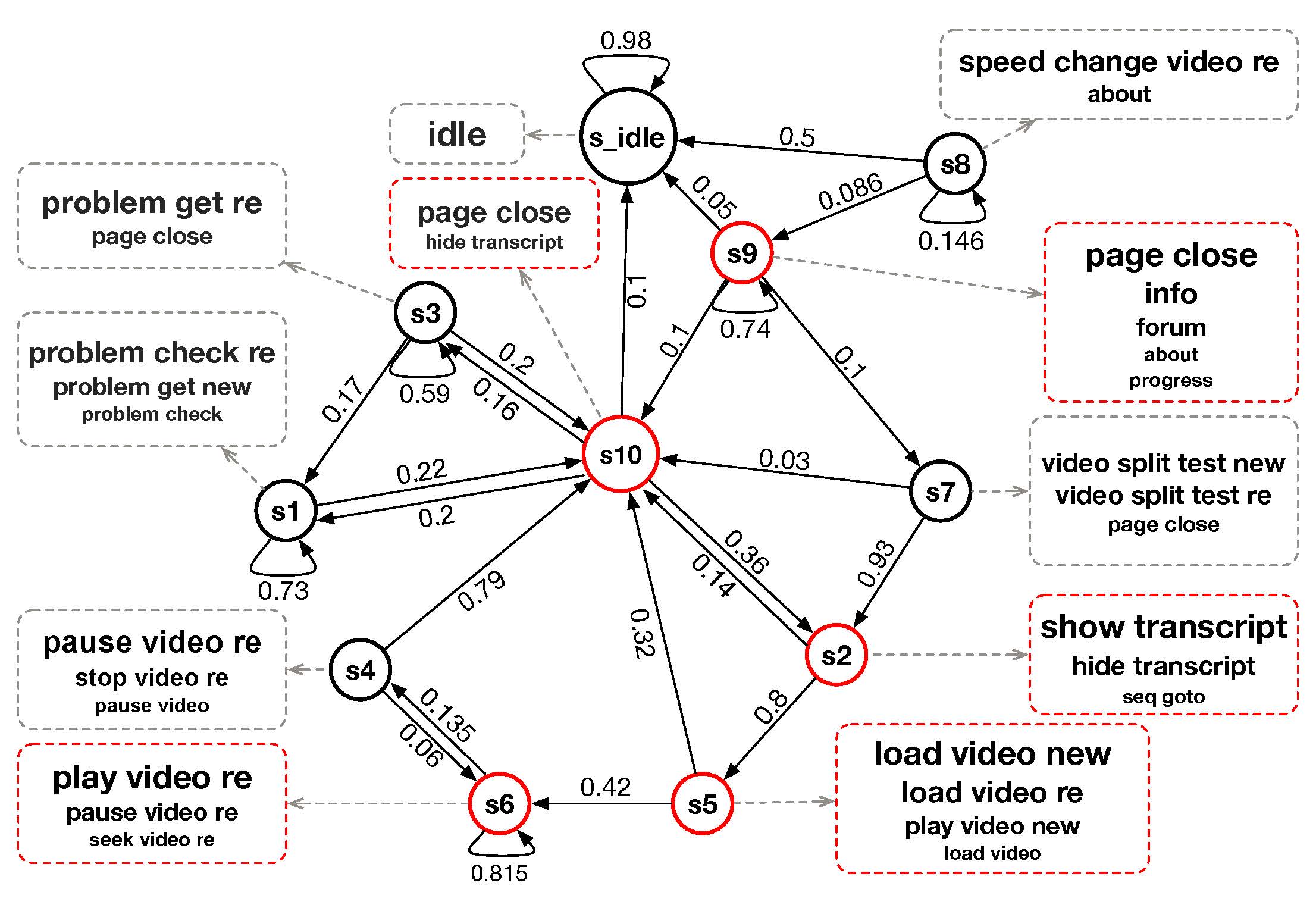

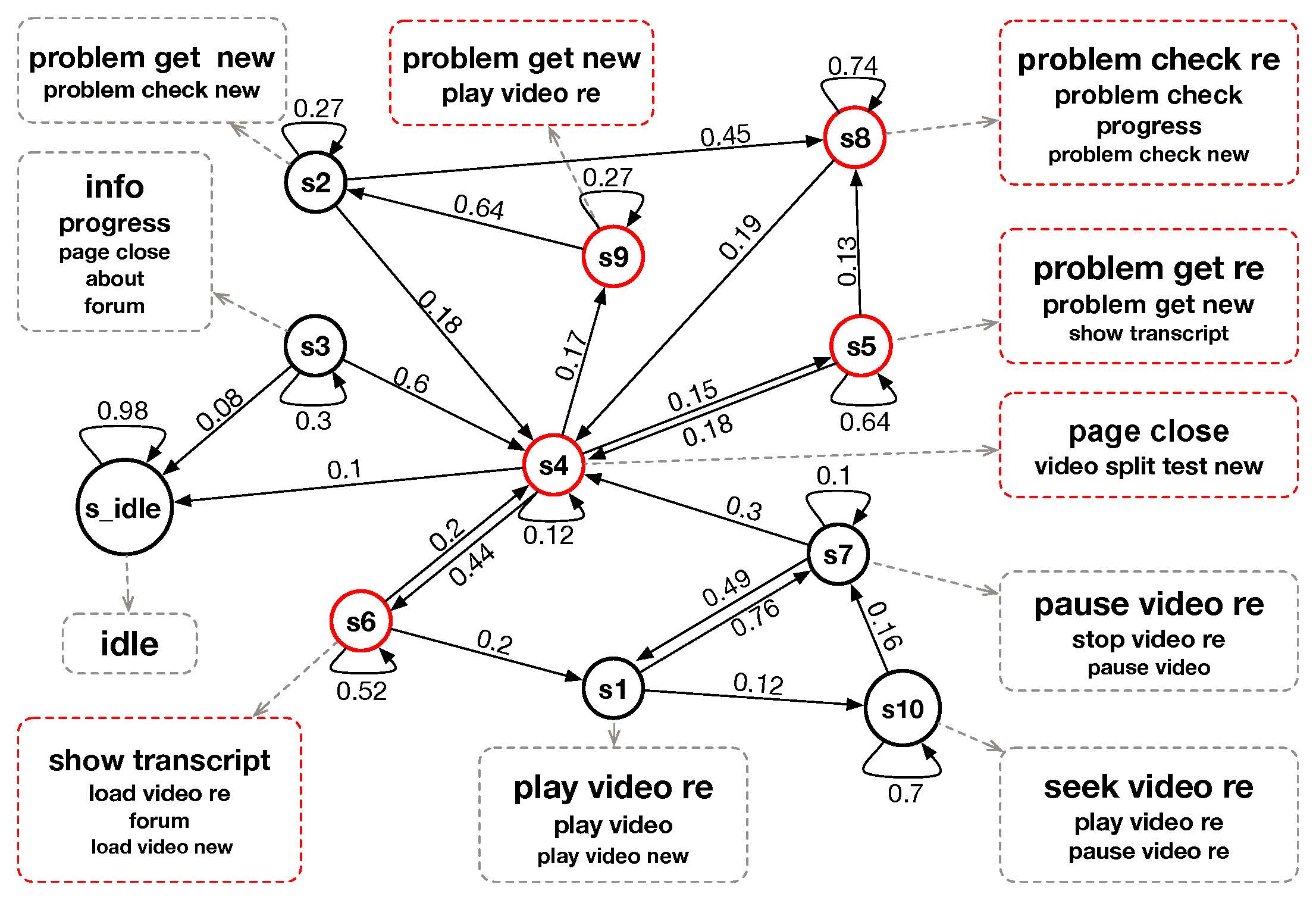

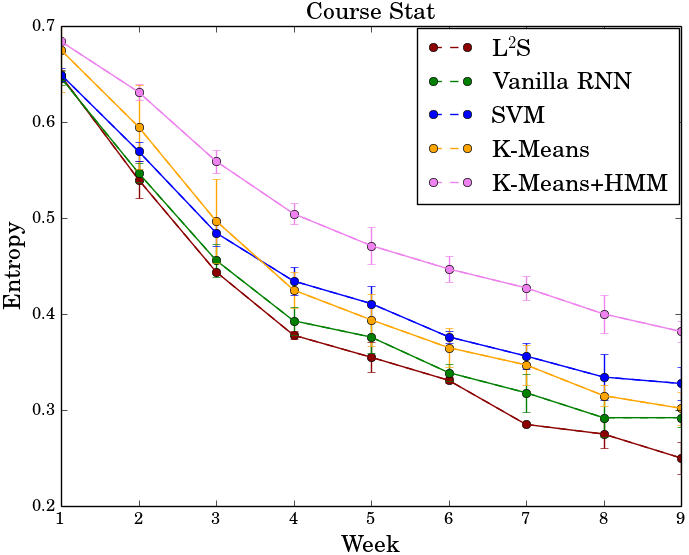

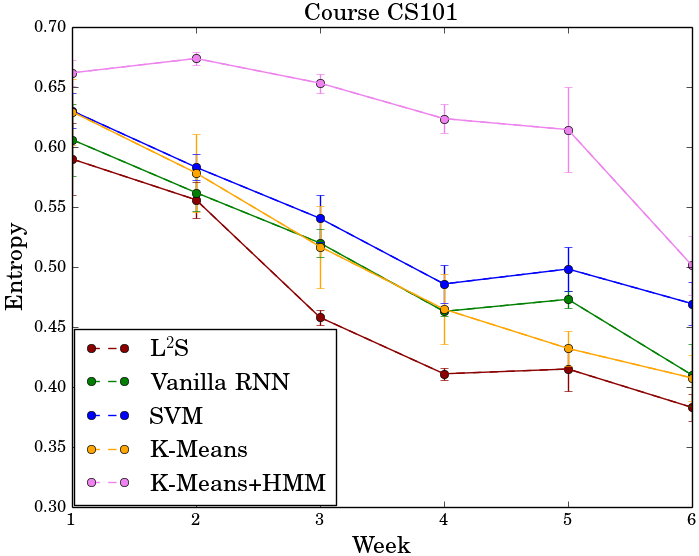

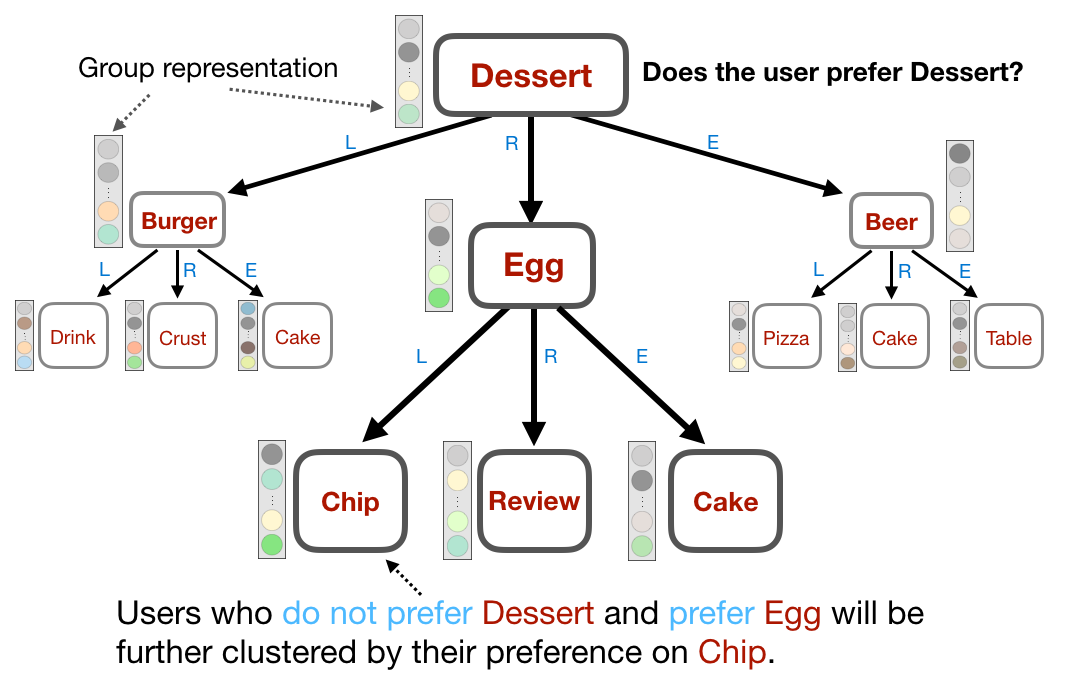

Later, we integrate regression trees to guide the learning of latent factor models for recommendation, and use the learnt tree structure to explain the resulting latent factors. Specifically, we build regression trees on users and on items from user-generated reviews, and associate a latent profile with each tree node to represent users and items. As the regression trees grow, the latent factors are gradually refined under the regularization imposed by the tree structure. As a result, we can trace how each latent profile was created by following the path of each factor through the regression trees, and this path serves as an explanation for the resulting recommendations.
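The tree-guided idea can be sketched in a few lines of Python. This is a simplified illustration, not the released implementation: it grows only a user tree, holds the item factors `V` fixed, assumes a hypothetical binary matrix `mentions[u, f]` recording whether user `u` mentioned feature `f` in a review, and fits each node's latent profile by ridge regression. All names and the toy data are invented for the example.

```python
import numpy as np


def fit_profile(ratings, V, lam=0.1):
    """Ridge fit of one latent profile shared by all (item, rating) pairs."""
    items = np.array([i for i, _ in ratings])
    y = np.array([r for _, r in ratings], dtype=float)
    A = V[items]
    d = V.shape[1]
    profile = np.linalg.solve(A.T @ A + lam * np.eye(d), A.T @ y)
    err = float(np.sum((A @ profile - y) ** 2))
    return profile, err


def pooled(users, ratings_by_user):
    """Collect the ratings of every user in a node."""
    return [pair for u in users for pair in ratings_by_user[u]]


def grow(users, ratings_by_user, mentions, V, depth, path=()):
    """Recursively split users; return {user: (leaf_profile, split_path)}."""
    profile, err = fit_profile(pooled(users, ratings_by_user), V)
    best = None
    if depth > 0:
        for f in range(mentions.shape[1]):
            yes = [u for u in users if mentions[u, f]]
            no = [u for u in users if not mentions[u, f]]
            if not yes or not no:
                continue  # this feature does not separate the node
            split_err = sum(
                fit_profile(pooled(g, ratings_by_user), V)[1] for g in (yes, no)
            )
            if best is None or split_err < best[0]:
                best = (split_err, f, yes, no)
    if best is None or best[0] >= err:
        # Leaf: every user in this node shares the profile and its path.
        return {u: (profile, path) for u in users}
    _, f, yes, no = best
    out = grow(yes, ratings_by_user, mentions, V, depth - 1, path + ((f, True),))
    out.update(
        grow(no, ratings_by_user, mentions, V, depth - 1, path + ((f, False),))
    )
    return out


# Toy data: two items, four users, two review-derived features.
V = np.array([[1.0, 0.0], [0.0, 1.0]])
ratings_by_user = {
    0: [(0, 5), (1, 1)],
    1: [(0, 5), (1, 1)],
    2: [(0, 1), (1, 5)],
    3: [(0, 1), (1, 5)],
}
mentions = np.array([[1, 1], [1, 0], [0, 1], [0, 0]])

leaves = grow([0, 1, 2, 3], ratings_by_user, mentions, V, depth=1)
for u, (profile, path) in sorted(leaves.items()):
    print(u, np.round(profile, 2), path)
```

In this toy run, the path attached to each user lists the feature tests that led to that user's latent profile, so the profile can be read back as "users who did (or did not) mention feature 0". A fuller version would also grow an item tree and alternate between the two.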

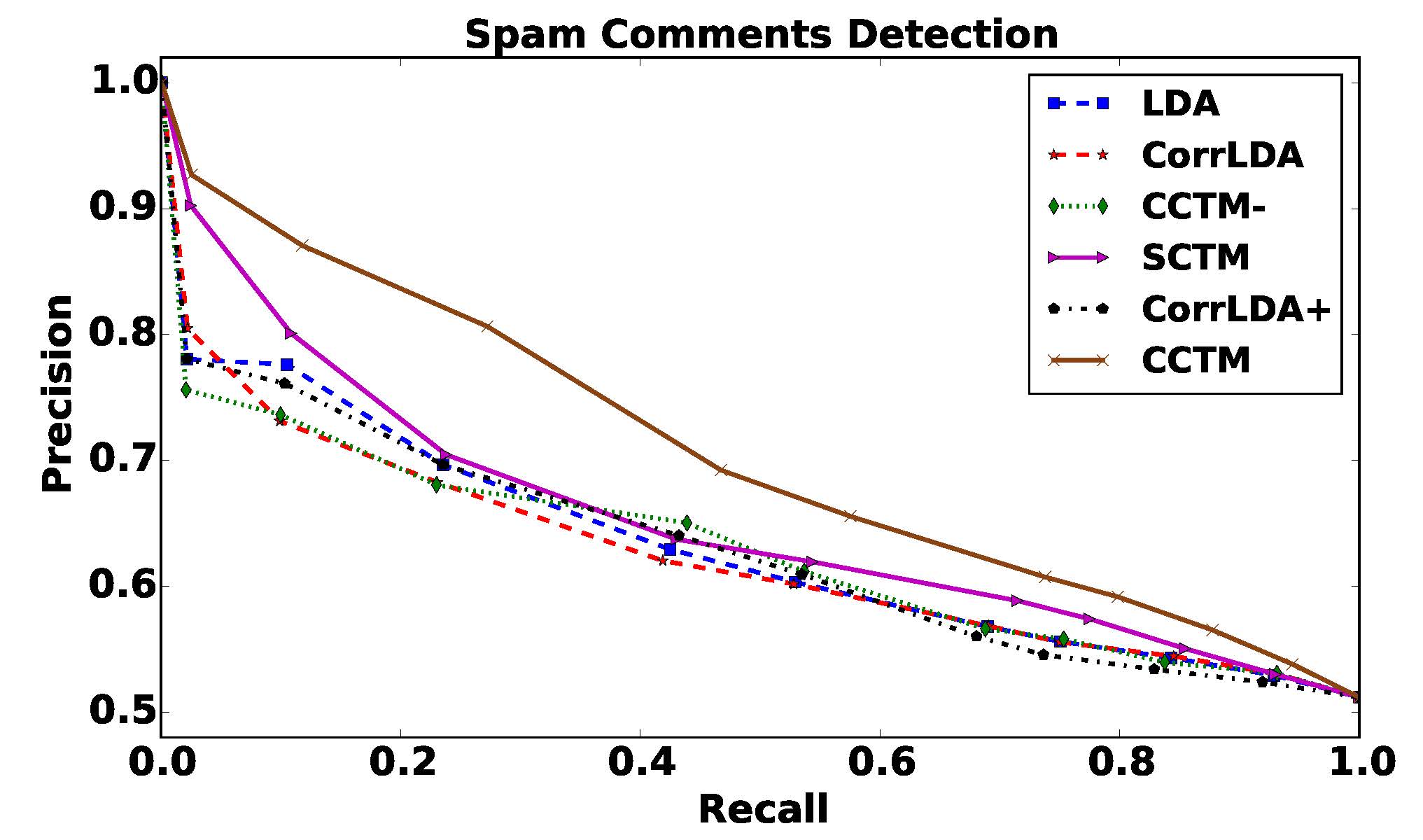

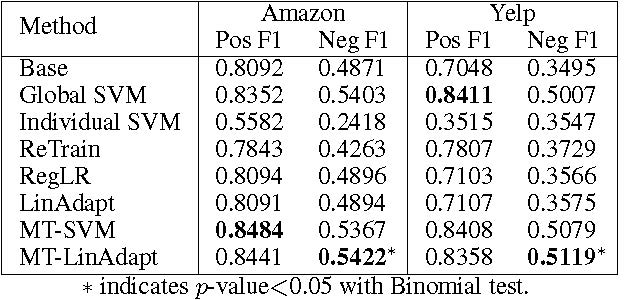

Extensive experiments on two large collections of Amazon and Yelp reviews demonstrate the advantage of our model over several competitive baseline algorithms. A user study also confirms the practical value of the explainable recommendations generated by our model.

Result Highlights:

Dissemination:

- Publication:
  - Yiyi Tao, Yiling Jia, Nan Wang and Hongning Wang. The FacT: Taming Latent Factor Models for Explainability with Factorization Trees. The 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR 2019), p295-304, 2019. (PDF)
  - Nan Wang, Yiling Jia, Yue Yin and Hongning Wang. Explainable Recommendation via Multi-Task Learning in Opinionated Text Data. The 41st International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR 2018), p165-174, 2018. (PDF)
- Code:
  - A Python implementation of our factorization tree algorithm for explainable recommendation can be found here.